A Deep Dive into Google's "Agents" White Paper: Hype or Revolution?


Google's recent white paper on "Agents" has created quite a buzz.

The paper explores the concept of AI agents and delves into their architecture and potential. Let's break down what this white paper offers, its key takeaways, and some areas where it could improve.

The Marketing Angle: A Platform-Centric View?

At first glance, the white paper feels like a marketing tool for Google's Vertex AI. And that's perfectly fine: companies often use such publications to showcase their platforms.

However, adopting a more platform-agnostic approach could have made the paper more universally applicable.

For instance, many examples in the white paper are tied to Vertex AI-specific features, which might be unfamiliar to those using other agentic frameworks.

Additionally, some concepts, such as extensions, are introduced but not explained in enough depth, so readers would benefit from clearer documentation and examples.

Despite these limitations, the paper provides a solid starting point for understanding agents. Let’s dive into the key concepts.

What is an Agent?

Google defines an agent as:

"An application that attempts to achieve a goal by observing the world and acting upon it using the tools at its disposal."

This definition is both simple and powerful. It captures the essence of what an agent is without overcomplicating things. Many experts on platforms like LinkedIn and YouTube layer extra terms such as reasoning and context awareness onto the definition, but the core idea remains straightforward.

Interestingly, my personal favorite definition comes from Hugging Face, which describes AI agents as:

"Programs where LLM outputs control the workflows."

This succinctly highlights the operational dynamics of agents, especially when integrated with language models.
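To make "LLM outputs control the workflow" concrete, here is a minimal, framework-agnostic sketch. The names `fake_llm`, `WORKFLOW`, and the step functions are hypothetical, and `fake_llm` is a keyword rule standing in for a real model call so the example runs offline. The point is only that the program's path is chosen by the model's output, not by branching the developer hard-coded on the input.

```python
from typing import Callable

# Hypothetical stand-in for an LLM call. A real agent would send the prompt to a
# model API; this keyword rule only fakes the model's choice so the sketch runs offline.
def fake_llm(prompt: str) -> str:
    return "use_weather_tool" if "weather" in prompt.lower() else "answer_directly"

def weather_step(query: str) -> str:
    return "routing to weather tool"

def answer_step(query: str) -> str:
    return "answering from model knowledge"

# The workflow's possible branches; which one runs is decided by the model's output.
WORKFLOW: dict[str, Callable[[str], str]] = {
    "use_weather_tool": weather_step,
    "answer_directly": answer_step,
}

def run(query: str) -> str:
    decision = fake_llm(f"Pick the next step for: {query}")
    return WORKFLOW[decision](query)

print(run("What's the weather in Paris?"))  # routing to weather tool
print(run("Who wrote Hamlet?"))             # answering from model knowledge
```

Swapping `fake_llm` for a real model changes nothing else in the program, which is exactly what makes the model the one in control.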

The Agentic Architecture: Core Components

The white paper also details the architecture of agents, a topic I’ve previously discussed on my channel. Here's a simplified breakdown of the three primary components that define an agentic system:

1. The Model

At the heart of any agent lies a language model. This serves as the foundation for the agent's intelligence and capabilities. Trained on extensive datasets, the model enables the agent to comprehend language, process instructions, and provide knowledge.

In an agentic framework, the model is not just a passive responder. Its capabilities drive the decision-making processes within the orchestration layer.

2. The Tools

Tools are what set agents apart from simple LLM calls. On its own, an LLM is limited: it can't interact with external systems or access real-time information. Tools extend those capabilities.

Agents use tools to interact with the external world, which makes them more dynamic and useful. Frameworks like LangChain and LlamaIndex show how tools extend what agentic systems can do and help them achieve their goals.
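A tool, stripped to its essentials, is a name, a description the model reads, and a function the agent can run. The sketch below is framework-agnostic and is not LangChain's, LlamaIndex's, or Vertex AI's API; the `Tool` class, `describe_tools`, `call_tool`, and the `get_weather` stub are all hypothetical names chosen for illustration.

```python
from dataclasses import dataclass
from typing import Any, Callable

@dataclass
class Tool:
    name: str
    description: str           # text the LLM reads when deciding which tool to use
    func: Callable[..., Any]   # code the agent runs when the tool is chosen

def get_weather(city: str) -> str:
    # Hypothetical stub: a real tool would call an external weather API here.
    return f"Sunny in {city}"

def add(a: float, b: float) -> float:
    return a + b

TOOLS = {t.name: t for t in [
    Tool("get_weather", "Get the current weather for a city.", get_weather),
    Tool("add", "Add two numbers.", add),
]}

def describe_tools() -> str:
    # Rendered into the prompt so the model knows which tools exist.
    return "\n".join(f"- {t.name}: {t.description}" for t in TOOLS.values())

def call_tool(name: str, **kwargs: Any) -> Any:
    # Dispatch the model's chosen tool name and arguments to the real function.
    if name not in TOOLS:
        raise KeyError(f"Unknown tool: {name}")
    return TOOLS[name].func(**kwargs)

print(describe_tools())
print(call_tool("get_weather", city="Paris"))  # Sunny in Paris
print(call_tool("add", a=2, b=3))             # 5
```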

3. The Orchestration Layer

Often referred to as the reasoning loop, this layer governs the agent's ability to:

Plan: Decide the next steps in a workflow.

Reason: Analyze the gathered information.

Execute: Take action based on the plan.

This iterative process is the backbone of an agent’s functionality, ensuring it can adapt and respond intelligently to various scenarios.
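The plan, reason, and execute cycle above can be sketched as a small loop. This is a minimal illustration under stated assumptions, not the paper's or Vertex AI's implementation: `fake_model` is a hypothetical scripted model that returns JSON decisions, the single `multiply` tool is made up, and `max_steps` is an arbitrary safety limit so the loop always terminates.

```python
import json
from typing import Any, Callable

# Hypothetical scripted "model": returns a JSON decision based on what it has
# observed so far. A real agent would call an LLM here instead.
def fake_model(history: list[dict]) -> str:
    if not any(h["role"] == "tool" for h in history):
        # Plan: no observation yet, so request a tool call.
        return json.dumps({"action": "multiply", "args": {"a": 6, "b": 7}})
    # Reason: an observation exists, so turn it into a final answer.
    result = history[-1]["content"]
    return json.dumps({"action": "final", "answer": f"The result is {result}"})

TOOLS: dict[str, Callable[..., Any]] = {"multiply": lambda a, b: a * b}

def run_agent(goal: str, max_steps: int = 5) -> str:
    history = [{"role": "user", "content": goal}]
    for _ in range(max_steps):
        decision = json.loads(fake_model(history))
        if decision["action"] == "final":
            return decision["answer"]
        # Execute: run the chosen tool and feed the observation back into the loop.
        observation = TOOLS[decision["action"]](**decision["args"])
        history.append({"role": "tool", "content": str(observation)})
    return "Stopped: step limit reached"

print(run_agent("What is 6 times 7?"))  # The result is 42
```

The step limit is a deliberate design choice: a real model can keep requesting tools indefinitely, so a bounded loop is a common safeguard.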

Tools (Key Takeaways)

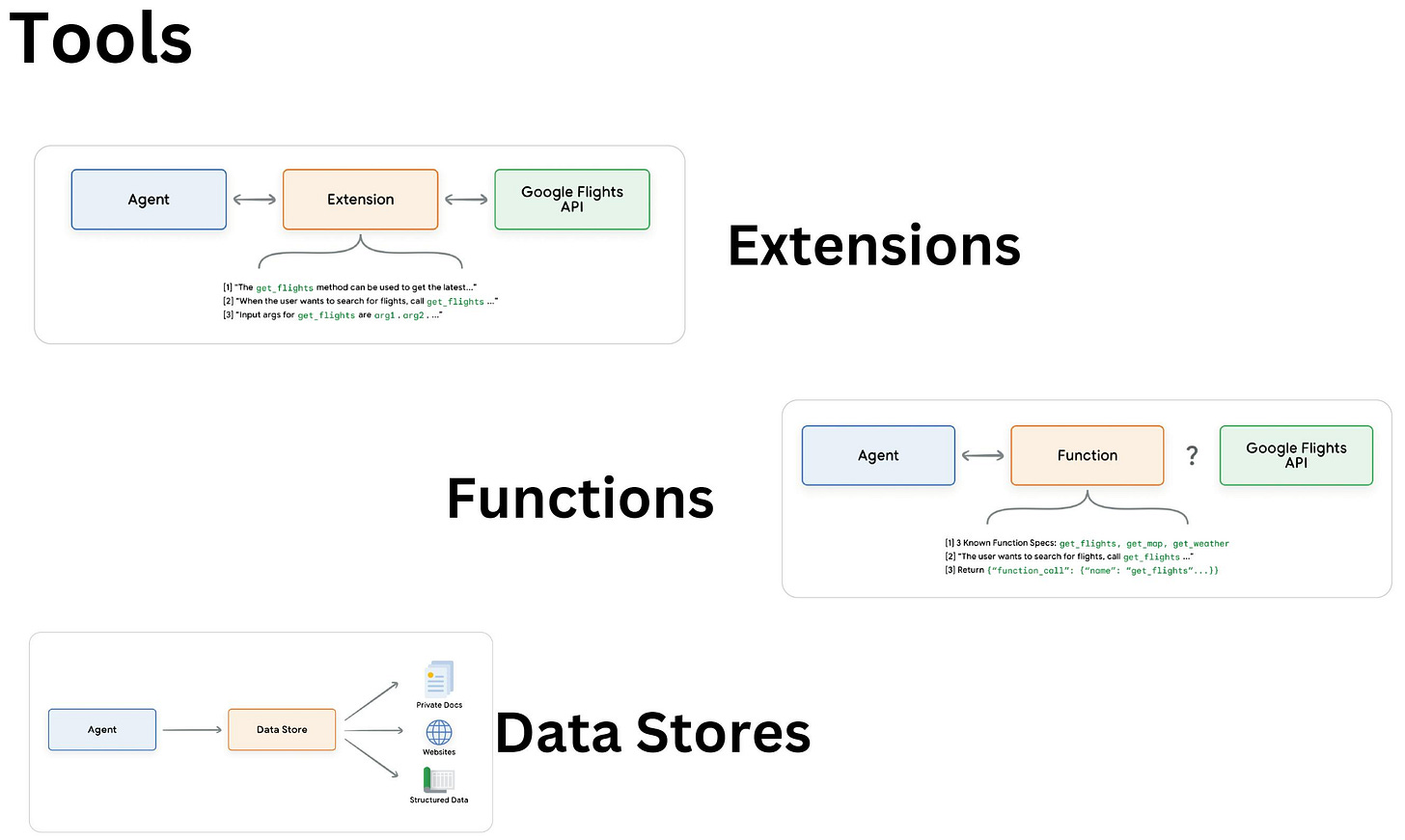

Extensions

Definition: Extensions are interfaces that bridge the gap between APIs and agents. They allow for seamless API execution by teaching agents how to use APIs via examples.

Use Case: Extensions are ideal for scenarios where the agent needs to dynamically interact with APIs like booking flights or fetching weather data. They reduce ambiguity in API calls by guiding the agent with context and examples.

My Example: My custom tool implementation fits here because it defines each tool with a name and a description, guiding the LLM to invoke the correct tool with the right arguments based on context.
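The "teaching by examples" idea behind extensions can be sketched as an API description plus a few example requests mapped to API arguments, rendered into the prompt, with the agent itself executing the call. This is a hypothetical, framework-agnostic illustration, not the Vertex AI Extensions API: the `Extension` class, `prompt_section`, the `search_flights` stub, and the model's chosen arguments are all assumptions.

```python
from dataclasses import dataclass
from typing import Any, Callable

@dataclass
class Extension:
    name: str
    description: str
    examples: list[tuple[str, dict]]  # (user request, API arguments) pairs that teach usage
    api: Callable[..., Any]           # called directly by the agent in this sketch

    def prompt_section(self) -> str:
        # Description plus worked examples, to be placed in the model's prompt.
        lines = [f"Extension {self.name}: {self.description}", "Examples:"]
        for request, args in self.examples:
            lines.append(f'  "{request}" -> {self.name}({args})')
        return "\n".join(lines)

def search_flights(origin: str, destination: str) -> list[str]:
    # Hypothetical stub standing in for a real flights API.
    return [f"{origin}->{destination} 09:00", f"{origin}->{destination} 17:30"]

flights = Extension(
    name="search_flights",
    description="Find flights between two airports.",
    examples=[("I want to fly from Austin to Zurich", {"origin": "AUS", "destination": "ZRH"})],
    api=search_flights,
)

print(flights.prompt_section())
# Assume the model, guided by the examples, emits these arguments for a new request:
model_args = {"origin": "SFO", "destination": "JFK"}
print(flights.api(**model_args))  # ['SFO->JFK 09:00', 'SFO->JFK 17:30']
```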

Functions

Definition: Functions are reusable logic modules that allow developers to define behavior and handle specific tasks.

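Difference: Unlike extensions, which the agent executes directly, functions leave API execution to client-side logic or middleware: the model only outputs the function name and its arguments. This is especially useful when security or authentication constraints prevent the agent from calling an API directly.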

My Observations: Google's distinction clarifies that functions give developers fine-grained control and decouple execution from the agent, which makes iteration easier without redeploying infrastructure.
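The client-side split can be sketched in a few lines. Here the model's reply is a hard-coded, hypothetical JSON string containing only a function name and arguments; the client parses it and runs its own code, so credentials and business logic never pass through the model. `get_order_status`, `CLIENT_FUNCTIONS`, and `execute_on_client` are illustrative names, not part of any real SDK.

```python
import json
from typing import Any, Callable

# Hypothetical model response: with function calling, the model returns only the
# function name and arguments; it does not make the API call itself.
model_output = '{"name": "get_order_status", "args": {"order_id": "A123"}}'

def get_order_status(order_id: str) -> str:
    # Runs in the client or middleware, where credentials can stay out of the model's reach.
    # Hypothetical stub for an internal API.
    orders = {"A123": "shipped"}
    return orders.get(order_id, "unknown")

CLIENT_FUNCTIONS: dict[str, Callable[..., Any]] = {"get_order_status": get_order_status}

def execute_on_client(raw: str) -> Any:
    call = json.loads(raw)
    func = CLIENT_FUNCTIONS.get(call["name"])
    if func is None:
        raise ValueError(f"Model requested an unknown function: {call['name']}")
    return func(**call["args"])

print(execute_on_client(model_output))  # shipped
```

In a full loop, the client would then send this result back to the model so it can compose its final answer.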