A Glimpse Into the Future of Software Engineering


These two posts—just a few lines of text by two influential voices in the tech space—have ignited a storm of conversations.

My social media feeds are buzzing—filled with commentary, debates, and threads that seem to extend endlessly. And the chatter isn’t dying down; in fact, it’s only growing louder.

What’s clear is this: they are a signal, even an alarm, that something has fundamentally shifted in the software industry.

In a recent LinkedIn post, Amazon CEO Andy Jassy dropped a bombshell: Amazon Q, their generative AI assistant, has dramatically redefined what’s possible in software development.

Take this: what used to take 50 developer days to upgrade applications to Java 17 now takes mere hours. The equivalent of 4,500 developer-years of work saved—just like that.

Amazon upgraded more than half of its production Java systems in less than six months. A process that usually demands extensive time and resources was completed in a fraction of the time, at a fraction of the cost.

And the most striking part? 79% of the auto-generated code reviews were shipped by developers without any additional tweaks.

The AI didn’t just assist—it delivered.

And it’s not just the corporate giants making waves. Influential voices in AI research are also weighing in on the transformative power of AI in coding.

In a recent tweet, AI researcher Andrej Karpathy shared his experience with AI-assisted programming. He found these tools so effective that they’ve completely reshaped his coding workflow.

Karpathy describes a new way of coding—what he calls "half-coding." He writes prompts in plain English, reviews the AI-generated code diffs, and lets the AI handle the heavy lifting, completing substantial portions of code in record time.

Karpathy says he can’t imagine going back to the way things were.

And neither can I. I’ve been using these code generators for over a year now, and my productivity has clearly increased.

Coding is Undergoing a Seismic Shift

So let’s start with a fact: coding is about to undergo, or is already undergoing, a seismic shift.

While researching this topic, I came across Russell Kaplan's thread on x.com, which resonated with me.

It’s a fascinating thread, so I will break down some of its most thought-provoking tweets.

Here’s why this matters. Research labs around the world are pouring resources into making AI models better at coding and reasoning. This isn’t just incremental progress—it’s a massive leap forward. These models are being trained to write code, reason through problems, and improve themselves in ways we haven’t seen before.

Why coding? What makes it so special?

The answer lies in the unique advantage coding offers: it’s a domain where AI can learn through “self-play.”

Unlike other fields where data is limited by human expertise, code can be tested, tweaked, and optimized automatically. It’s a playground for AI, where models can write code, run it, and check for consistency—all without human intervention. This kind of automatic supervision is not just beneficial; it’s revolutionary.

But what is self-play?

To understand self-play, let’s look at the model that made the concept famous.

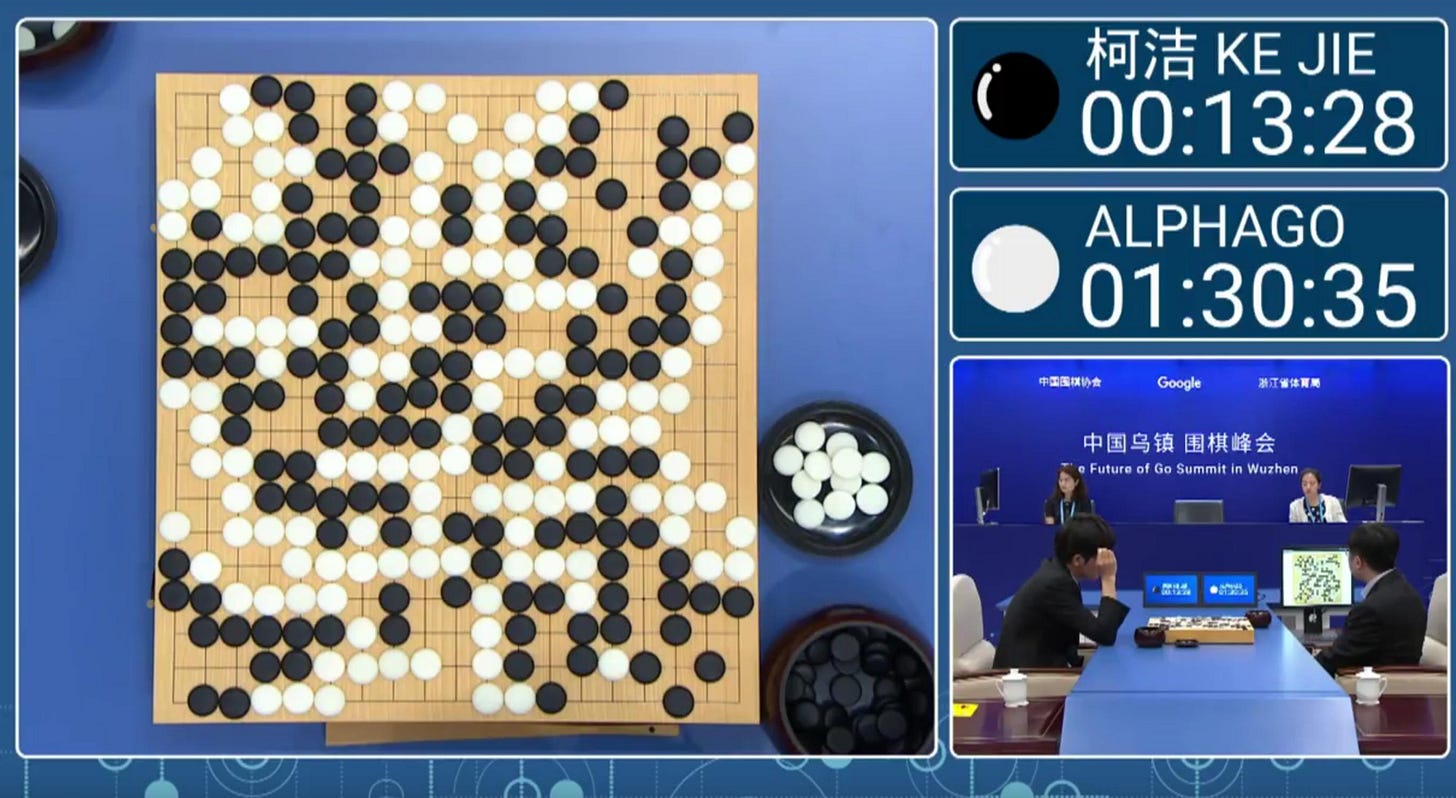

AlphaGo, developed by DeepMind, is a groundbreaking AI that made history by defeating human world champions in the ancient game of Go.

One of its key innovations was the use of self-play, where AlphaGo played countless games against versions of itself.

This technique allowed the AI to continuously improve, discovering new strategies and refining its decision-making process without human input.

Fascinating, isn’t it?

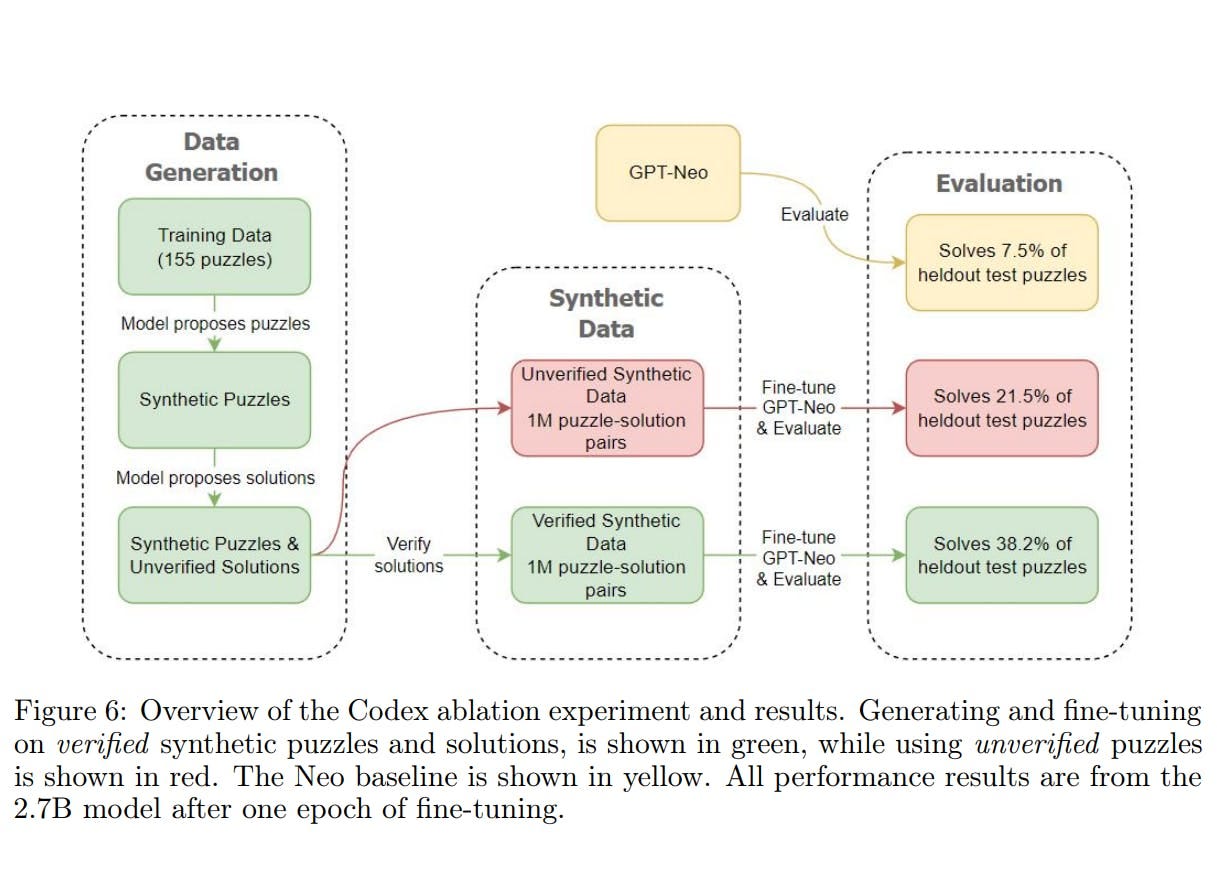

Taking inspiration from this, researchers from Microsoft and MIT, in the paper “Language Models Can Teach Themselves to Program Better,” set out to answer the question:

“Can an LM design its own programming problems to improve its problem-solving ability?”

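Rather than using English problem descriptions, which are ambiguous and hard to verify, they had the model generate programming puzzles: short Python functions whose candidate solutions can be checked simply by running them.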
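To make this concrete, here is a minimal sketch of what such a puzzle could look like. The puzzle itself and the helper name `is_solved` are illustrative assumptions of mine, not taken from the paper; the point is only that a puzzle is a function returning True or False, so a proposed answer can be checked by executing it.

```python
from typing import Callable, List

def f(nums: List[int]) -> bool:
    # Hypothetical puzzle: find three distinct positive integers that sum to 10.
    return (
        len(nums) == 3
        and len(set(nums)) == 3
        and all(n > 0 for n in nums)
        and sum(nums) == 10
    )

def g() -> List[int]:
    # A candidate solution: returns an input that should satisfy the puzzle.
    return [1, 2, 7]

def is_solved(puzzle: Callable, solution: Callable) -> bool:
    # Verification is just execution: no human judgment is needed.
    try:
        return puzzle(solution()) is True
    except Exception:
        return False
```

Calling `is_solved(f, g)` checks the answer mechanically, which is exactly the property an English problem description lacks.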

Self-play using programming puzzles

Here’s how they built the pipeline.

Puzzle Generation: The language model generates new puzzles by sampling from the training set, combining them, and creating additional puzzles within its context window. These puzzles are then filtered for syntactic validity and to exclude trivial solutions.

Solution Generation: The model attempts to solve the valid puzzles using a few-shot learning strategy, with a predetermined number of attempts per puzzle.

Solution Verification: Generated solutions are verified using a Python interpreter. From these, up to m correct and concise solutions are selected for each puzzle.

Fine-Tuning: The model is fine-tuned on the selected puzzle-solution pairs.
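The four steps above can be sketched roughly as follows. This is a toy illustration under heavy assumptions: the two `toy_*` functions are hypothetical stand-ins for sampling from a language model, trivial-puzzle filtering is omitted, and the final fine-tuning step is left out, so the function simply returns the verified pairs that would be used for fine-tuning. A real pipeline would also need sandboxing and timeouts before executing generated code.

```python
import random
from typing import List, Tuple

def toy_generate_puzzles(rng: random.Random, count: int) -> List[str]:
    # Stand-in for the LM: emit puzzle source code, plus one broken puzzle.
    puzzles = []
    for _ in range(count):
        target = rng.randint(2, 50)
        puzzles.append(
            f"def f(x: int) -> bool:\n    return x * x == {target * target}\n"
        )
    puzzles.append("def f(x: int) -> bool\n    return True\n")  # missing colon
    return puzzles

def is_valid_syntax(src: str) -> bool:
    # Step 1 filter: keep only puzzles that compile.
    try:
        compile(src, "<puzzle>", "exec")
        return True
    except SyntaxError:
        return False

def toy_generate_solutions(rng: random.Random, attempts: int) -> List[str]:
    # Stand-in for few-shot sampling: a fixed number of guesses per puzzle.
    return [f"def g():\n    return {rng.randint(2, 50)}\n" for _ in range(attempts)]

def verify(puzzle_src: str, solution_src: str) -> bool:
    # Step 3: run puzzle and solution in the interpreter and check f(g()).
    namespace: dict = {}
    try:
        exec(puzzle_src, namespace)    # unsafe on untrusted code; sketch only
        exec(solution_src, namespace)
        return namespace["f"](namespace["g"]()) is True
    except Exception:
        return False

def build_finetuning_set(
    seed: int = 0, n_puzzles: int = 5, attempts: int = 200, m: int = 2
) -> List[Tuple[str, str]]:
    rng = random.Random(seed)
    puzzles = [p for p in toy_generate_puzzles(rng, n_puzzles) if is_valid_syntax(p)]
    dataset = []
    for puzzle in puzzles:
        candidates = toy_generate_solutions(rng, attempts)
        correct = {s for s in candidates if verify(puzzle, s)}
        # Keep up to m correct solutions, preferring the shortest ones.
        dataset.extend((puzzle, s) for s in sorted(correct, key=len)[:m])
    return dataset  # Step 4 would fine-tune the model on these pairs.
```

The key design choice is that the interpreter, not a human, decides which pairs enter the training set.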

The result?

The diagram illustrates how the iterative process of generating, verifying, and fine-tuning on synthetic data significantly improves the performance of the language model in solving puzzles.

Initial Evaluation: Without any fine-tuning, GPT-Neo solves 7.5% of the held-out test puzzles.

Evaluation After Fine-Tuning on Unverified Data: After fine-tuning on the unverified synthetic data, the model's performance improves, solving 21.5% of the held-out test puzzles.

Evaluation After Fine-Tuning on Verified Data: Fine-tuning on the verified synthetic data further enhances the model's performance, allowing it to solve 38.2% of the held-out test puzzles.
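To put those three percentages in perspective, the short calculation below expresses each result as a multiple of the baseline. The numbers come straight from the text above; the function name `relative_gain` is just an illustrative choice.

```python
# Solve rates on held-out test puzzles, as reported above (in percent).
BASELINE = 7.5      # no fine-tuning
UNVERIFIED = 21.5   # fine-tuned on unverified synthetic data
VERIFIED = 38.2     # fine-tuned on verified synthetic data

def relative_gain(before: float, after: float) -> float:
    # How many times higher the "after" solve rate is than the "before" rate.
    return after / before

for label, rate in [("unverified", UNVERIFIED), ("verified", VERIFIED)]:
    print(f"{label}: {relative_gain(BASELINE, rate):.2f}x the baseline")
```

In other words, verification roughly takes the model from about three times the baseline to about five times the baseline.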

Now, let’s look ahead.

In just a few years, software engineering will be almost unrecognizable. Imagine having an army of coding agents at your disposal—each one capable of handling tasks from start to finish. This isn’t science fiction; it’s where we’re headed.

Engineers will transition from writing lines of code to managing these agents, overseeing the architecture of systems, and making high-level decisions.

This shift will redefine the role of the software engineer. In this new world, coding becomes less about the syntax and more about the strategy—understanding what needs to be built and why.

It’s like moving from being a craftsman to a project manager, where your focus is on the bigger picture.

To extend this idea, I return to Andrej Karpathy’s vision of AI resembling an operating system—essentially, a powerful agent. Andrej describes this as an entity with more knowledge than any single human on all subjects.

Now, imagine this as a specialized agent focused on a specific domain or business use case.

It’s possible, and we may not be very far from it.

Russell calls this “Software Abundance.”

As coding becomes 10 times more accessible, we’ll see a proliferation of what can be called “single-use software”—apps and websites designed for specific, often one-off purposes.

This abundance won’t just democratize software creation; it will fundamentally change how we think about software itself. Imagine tools being created for specific events, or small businesses commissioning custom apps for limited-time campaigns.

What was once impractical will become routine.

As this new reality unfolds, the role of the software engineer will continue to evolve. Just as engineers transitioned from assembly language to high-level languages like Python, they will adapt to a world where English, rather than code, becomes the primary tool of communication.

This change will require a shift in mindset—from focusing on how to write code, to understanding what needs to be accomplished.

The job of a software engineer will become more about defining problems and architecting solutions, with coding agents handling the execution.

Conclusion

We’re on the brink of a transformation that will not only change how we code but also how we think about building software altogether.

What will help you navigate the ambiguity of this phase is adaptability.

If you’re as excited about this future as I am, hit the subscribe button to stay adaptable to the new possibilities.

Leave a comment below—how do you think AI will change the way you work or build?