Building a Multi-Agent Orchestrator: A Step-by-Step Guide

If you prefer watching a video, there is an accompanying video walkthrough of this guide.

Today, we’re diving into an exciting project: creating a Multi-Agent Orchestrator.

This post is an extension of my earlier guide, "Building an AI Agent from Scratch."

If you’re new here, I recommend reading that post first to get up to speed, since we’ll build on its concepts and code.

In this project, we’ll orchestrate actions across multiple agents, so that tasks such as fetching weather information and the current time are each routed to the right agent and executed. Let’s jump in!

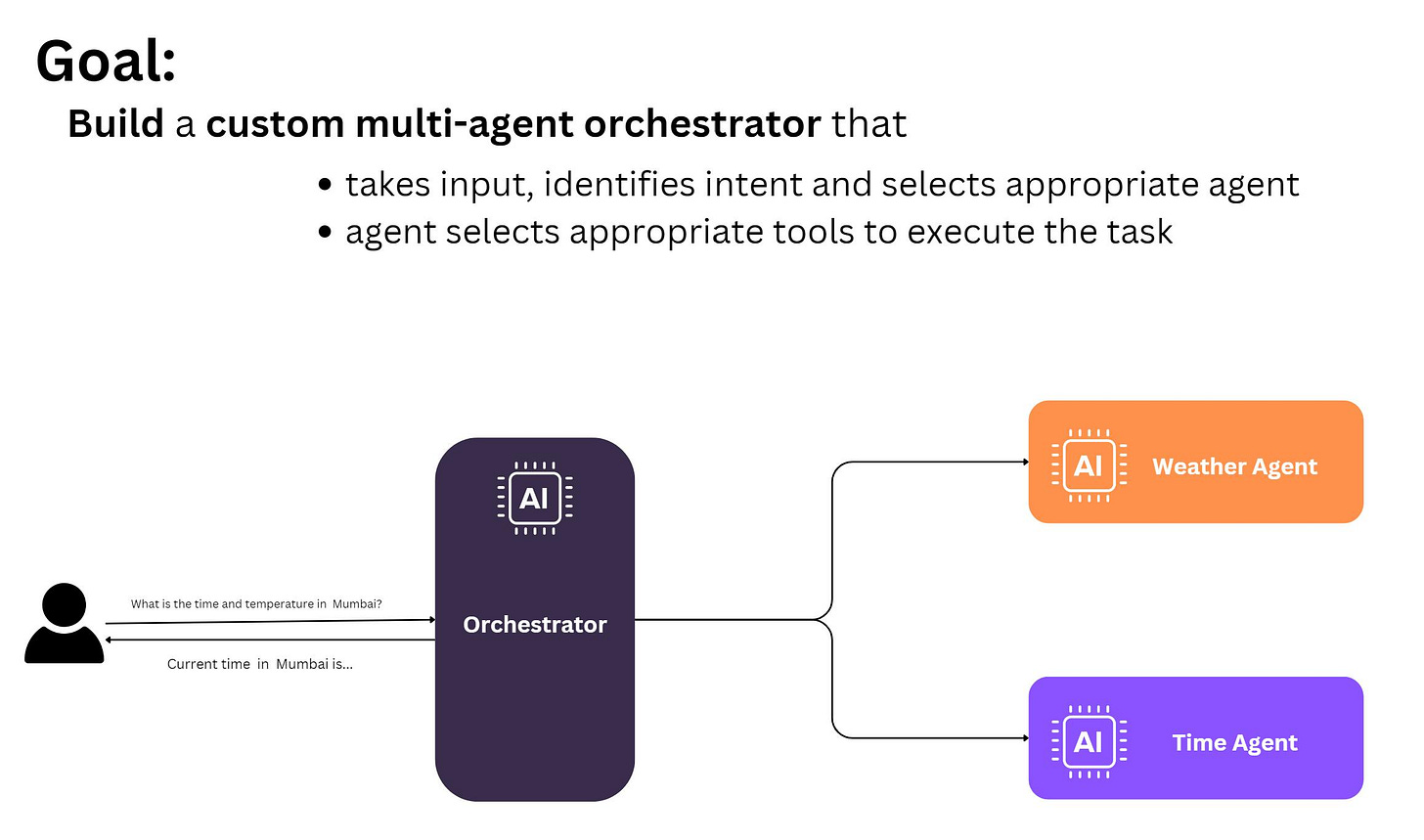

What Is a Multi-Agent Orchestrator?

A Multi-Agent Orchestrator is a system that:

Identifies the intent of a user’s input.

Selects the appropriate agent to handle the request.

Executes tasks using tools associated with the agent.

Think of it as a manager assigning tasks to specialized team members. A query that involves several tasks is broken into steps, and each step is handed to the agent best suited for it.
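The sketch below makes the three steps concrete in a deliberately simplified form: it replaces the LLM with a keyword table. `INTENT_KEYWORDS`, `classify_intents`, and `orchestrate` are hypothetical names that are not part of the project. The real orchestrator asks the LLM to pick the agent, which handles phrasings that keyword matching would miss. Crude substring matching also misfires, for example "time" matches "sometimes".

```python
from typing import Callable

# Hypothetical keyword table standing in for the LLM's intent classification.
INTENT_KEYWORDS = {
    "Weather Agent": ("weather", "temperature"),
    "Time Agent": ("time", "clock"),
}


def classify_intents(query: str) -> list[str]:
    """Step 1: return the name of every agent whose keywords appear in the query."""
    text = query.lower()
    return [agent for agent, words in INTENT_KEYWORDS.items() if any(w in text for w in words)]


def orchestrate(query: str, agents: dict[str, Callable[[str], str]]) -> str:
    """Steps 2 and 3: hand the query to each matching agent and join the answers."""
    answers = [agents[name](query) for name in classify_intents(query)]
    return " ".join(answers) if answers else "No action or agent needed"
```

For example, `orchestrate("What's the weather and the time?", agents)` calls both agents in table order and joins their replies with a space.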

What We’ll Build

We’ll create:

Agents: Specialized entities to handle tasks like fetching weather or time.

Tools: Functional utilities for the agents, such as APIs or database queries.

Orchestrator: The central system managing task delegation and execution.

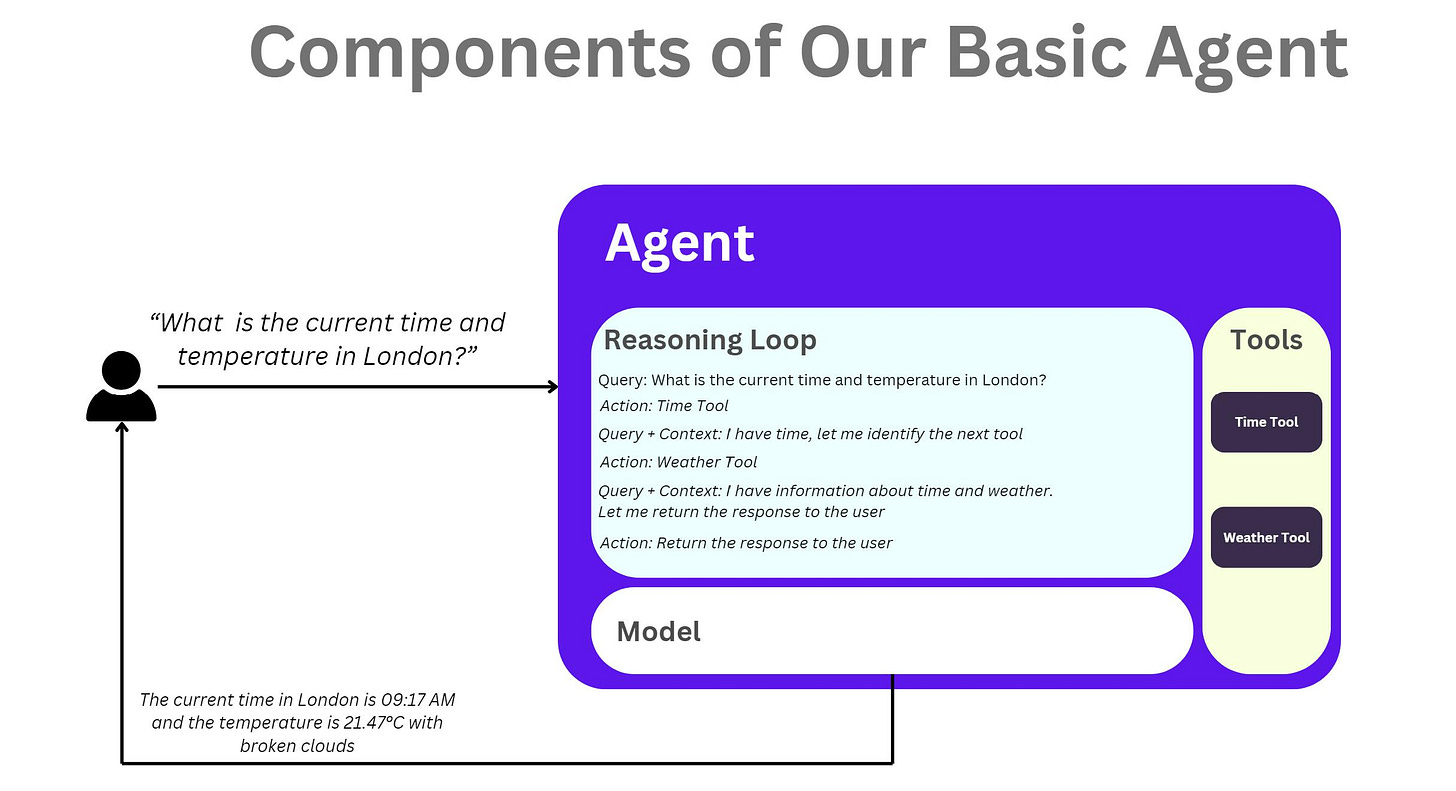

Key Components of an Agent

An agent has three main components:

Reasoning Loop: Decides the next action based on context.

Model: Uses a large language model (LLM) for decision-making.

Tools: A list of utilities to perform specific tasks.

Our agents let the LLM decide which tool to call for each input, so new capabilities can be added by giving an agent another tool.
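The "decide, then act" step comes down to mapping the action name returned by the LLM onto a tool. Here is a minimal sketch that assumes each tool is a plain function taking a string payload. It shows how a dictionary lookup could stand in for scanning the tool list. `make_dispatcher` is a hypothetical helper, not part of the project.

```python
from typing import Callable


def make_dispatcher(tools: dict[str, Callable[[str], str]]) -> Callable[[dict], str]:
    """Build a function that executes the tool named in an LLM decision."""
    # Index tools by lower-cased name so "weather tool" matches "Weather Tool"
    registry = {name.lower(): fn for name, fn in tools.items()}

    def dispatch(decision: dict) -> str:
        action = str(decision.get("action", "")).lower()
        args = decision.get("args", "")
        if action == "respond_to_user":
            return args  # the LLM chose to answer directly
        if action in registry:
            return registry[action](args)
        return f"Unknown action: {decision.get('action')}"

    return dispatch
```

Returning an "Unknown action" message, rather than raising, lets the caller feed the mistake back to the LLM on the next turn.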

The Agent Class

Here’s a high-level breakdown of the Agent class:

Constructor: Initializes the agent with a name, description, tools, and an LLM model.

Process Input: Takes user input, decides on a tool, and executes the task.

Prompting: Constructs a prompt for the LLM to guide decision-making.

Agents also parse the LLM's response into a Python dictionary so that the chosen action and its arguments can be executed.

```python
import ast
import json

from llm.llm_ops import query_llm
from tools.base_tool import Tool


class Agent:
    def __init__(self, Name: str, Description: str, Tools: list[Tool], Model: str):
        self.memory = []  # running conversation history for this agent
        self.name = Name
        self.description = Description
        self.tools = Tools
        self.model = Model  # stored for reference; query_llm below only receives the prompt
        self.max_memory = 10

    def json_parser(self, input_string: str):
        # Accept strict JSON first; fall back to a Python literal because the LLM
        # may reply with a single-quoted dict.
        try:
            parsed = json.loads(input_string)
        except json.JSONDecodeError:
            parsed = ast.literal_eval(input_string)
        if isinstance(parsed, (dict, list)):
            return parsed
        raise ValueError("Invalid JSON response")

    def process_input(self, user_input: str):
        self.memory.append(f"User: {user_input}")
        # Keep only the most recent entries so the prompt stays short
        self.memory = self.memory[-self.max_memory:]
        context = "\n".join(self.memory)
        tool_descriptions = "\n".join(
            f"- {tool.name()}: {tool.description()}" for tool in self.tools
        )
        # Render the expected format as real JSON (double quotes)
        response_format = json.dumps({"action": "", "args": ""})

        prompt = f"""Context:
{context}

Available tools:
{tool_descriptions}

Based on the user's input and context, decide if you should use a tool or respond directly.
If you identify an action, respond with the tool name and the arguments for the tool.
If you decide to respond directly to the user, make the action "respond_to_user" with args as your response.
Always reply in the following format.

Response Format:
{response_format}
"""
        response = query_llm(prompt)
        self.memory.append(f"Agent: {response}")
        response_dict = self.json_parser(response)

        # Check if any tool can handle the input
        for tool in self.tools:
            if tool.name().lower() == response_dict["action"].lower():
                return tool.use(response_dict["args"])

        # No tool matched (e.g. "respond_to_user"): return the decision itself
        return response_dict
```
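The `json_parser` above expects the reply to contain nothing but the dictionary. In practice, models sometimes wrap the object in a ```` ```json ```` fence or add a sentence before it. The sketch below is a more tolerant parser that takes the text between the first `{` and the last `}`. It is not part of the project, and `extract_json` is a hypothetical name.

```python
import ast
import json


def extract_json(text: str) -> dict:
    """Parse the text between the first '{' and the last '}' of an LLM reply."""
    start, end = text.find("{"), text.rfind("}")
    if start == -1 or end < start:
        raise ValueError("No JSON object found in LLM response")
    candidate = text[start:end + 1]
    try:
        parsed = json.loads(candidate)
    except json.JSONDecodeError:
        # Fall back to Python literal syntax, e.g. {'action': 'respond_to_user'}
        parsed = ast.literal_eval(candidate)
    if not isinstance(parsed, dict):
        raise ValueError("LLM response is not a JSON object")
    return parsed
```

This heuristic fails if the reply contains two separate objects. Asking the model for JSON only, as the prompt does, remains the first line of defense.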

The Orchestrator

The orchestrator coordinates multiple agents:

Accepts user input.

Selects the right agent based on the intent.

Manages task execution, including cases where multiple tasks are requested.

Core Features of the Orchestrator:

Maintains context by storing user queries, agent responses, and intermediate results.

Uses a reasoning loop to determine the next steps.

Constructs prompts to guide the LLM in selecting the right agent and tools.

```python
import ast
import json

from llm.llm_ops import query_llm
from agents.base_agent import Agent
from logger import log_message


class AgentOrchestrator:
    def __init__(self, agents: list[Agent]):
        self.agents = agents
        self.memory = []  # Stores the reasoning and action steps taken
        self.max_memory = 10

    def json_parser(self, input_string: str):
        # Same parser as Agent: strict JSON first, then a Python-literal fallback
        try:
            parsed = json.loads(input_string)
        except json.JSONDecodeError:
            parsed = ast.literal_eval(input_string)
        if isinstance(parsed, (dict, list)):
            return parsed
        raise ValueError("Invalid JSON response")

    def orchestrate_task(self, user_input: str):
        # Keep only the most recent reasoning/action steps
        self.memory = self.memory[-self.max_memory:]
        context = "\n".join(self.memory)
        print(f"Context: {context}")

        response_format = json.dumps({"action": "", "input": "", "next_action": ""})
        agent_descriptions = "\n".join(
            f"- {agent.name}: {agent.description}" for agent in self.agents
        )

        prompt = f"""
Use the context from memory to plan next steps.
Context:
{context}

You are an expert intent classifier.
You will use the context provided and the user's input to classify the intent and select the appropriate agent.
You will rewrite the input for the agent so that the agent can efficiently execute the task.

Here are the available agents and their descriptions:
{agent_descriptions}

User Input:
{user_input}

###Guidelines###
- Sometimes you might have to use multiple agents to solve the user's input. You have to do that in a loop.
- The original user input could have multiple tasks; use the context to understand the previous actions taken and the next steps you should take.
- Read the context, take your time to understand it, and check whether there were several tasks and whether you executed them all.
- If there are no actions to be taken, make the action "respond_to_user" with your final thoughts combining all previous responses as input.
- Respond with "respond_to_user" only when there are no agents to select from or there is no next_action.
- You will return the agent name in the form of {response_format}
- Always return valid JSON like {response_format} and nothing else.
"""
        llm_response = self.json_parser(query_llm(prompt))
        print(f"LLM Response: {llm_response}")
        self.memory.append(f"Orchestrator: {llm_response}")

        action = llm_response["action"]
        user_input = llm_response["input"]  # input rewritten for the selected agent
        print(f"Action identified by LLM: {action}")

        if action == "respond_to_user":
            return llm_response

        for agent in self.agents:
            if agent.name.lower() == action.lower():
                agent_response = agent.process_input(user_input)
                print(f"{action} response: {agent_response}")
                self.memory.append(f"Agent Response for Task: {agent_response}")
                return agent_response

        # No agent matched the action; run() treats this as "nothing to do"
        return "No action or agent needed"

    def run(self):
        print("LLM Agent: Hello! How can I assist you today?")
        user_input = input("You: ")
        self.memory.append(f"User: {user_input}")

        while True:
            if user_input.lower() in ["exit", "bye", "close"]:
                print("See you later!")
                break

            response = self.orchestrate_task(user_input)
            print(f"Final response of orchestrator {response}")

            if isinstance(response, dict) and response.get("action") == "respond_to_user":
                # Orchestrator replies use "input"; an agent's direct reply uses "args"
                message = response.get("input", response.get("args"))
                log_message(f"Response from Agent: {message}", "RESPONSE")
                user_input = input("You: ")
                self.memory.append(f"User: {user_input}")
            elif response == "No action or agent needed":
                print("Response from Agent: ", response)
                user_input = input("You: ")
                self.memory.append(f"User: {user_input}")
            else:
                # Feed the agent's result back so the orchestrator can plan the next step
                user_input = str(response)
```
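The orchestrator caps its history by slicing (`self.memory[-self.max_memory:]`) at the start of each call, so the list can grow past the limit between calls. One alternative is a `collections.deque` with `maxlen`, which drops the oldest entry automatically on every append. The sketch below is hypothetical, and `BoundedMemory` is not part of the project.

```python
from collections import deque


class BoundedMemory:
    """Hypothetical helper that keeps only the newest `max_items` entries."""

    def __init__(self, max_items: int = 10):
        # A deque with maxlen silently discards the oldest entry on overflow
        self._items = deque(maxlen=max_items)

    def add(self, role: str, text: str) -> None:
        self._items.append(f"{role}: {text}")

    def context(self) -> str:
        # Same newline-joined format the orchestrator puts into its prompt
        return "\n".join(self._items)
```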

Tools in Action

Agents use tools to perform tasks. For example:

Weather Tool: Fetches real-time weather data from OpenWeatherMap.

Time Tool: Determines the local time for a given city, even when the user doesn’t specify a timezone.

Each tool includes:

A name and description to guide the LLM.

A use method to perform the task.
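The post imports `Tool` from `tools.base_tool` but doesn't show it. The agents call `tool.name()`, `tool.description()`, and `tool.use(args)`, so a minimal abstract base class could look like the following. This is an assumption, and the repository's actual definition may differ. `EchoTool` is a hypothetical example subclass.

```python
from abc import ABC, abstractmethod


class Tool(ABC):
    """Hypothetical base class with the three members the agents rely on."""

    @abstractmethod
    def name(self) -> str:
        """Short, unique name the LLM returns as its "action"."""

    @abstractmethod
    def description(self) -> str:
        """Explains what the tool does and what payload it expects."""

    @abstractmethod
    def use(self, payload: str):
        """Performs the task with the payload chosen by the LLM."""


class EchoTool(Tool):
    """Toy tool showing what a concrete subclass looks like."""

    def name(self) -> str:
        return "Echo Tool"

    def description(self) -> str:
        return "Repeats the payload back. Example: hello"

    def use(self, payload: str) -> str:
        return payload
```

Marking the methods abstract means a subclass that forgets one of them fails at instantiation instead of when the LLM first picks it.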

```python
import os

import requests

from tools.base_tool import Tool


class WeatherTool(Tool):
    def name(self):
        return "Weather Tool"

    def description(self):
        return "Provides weather information for a given location. The payload is just the location. Example: New York"

    def use(self, location: str):
        api_key = os.getenv("OPENWEATHERMAP_API_KEY")
        # Let requests build and URL-encode the query string (e.g. "New York")
        resp = requests.get(
            "https://api.openweathermap.org/data/2.5/weather",
            params={"q": location, "appid": api_key, "units": "metric"},
            timeout=10,
        )
        data = resp.json()

        # OpenWeatherMap reports success with cod == 200
        if data.get("cod") == 200:
            temp = data["main"]["temp"]
            description = data["weather"][0]["description"]
            result = f"The weather in {location} is currently {description} with a temperature of {temp}°C."
            print(result)
            return result

        return f"Sorry, I couldn't find weather information for {location}."
```
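The Time Tool itself isn't shown in the post. One way to find a city's local time without the user naming a timezone is to map the city to a UTC offset. The sketch below assumes a hypothetical hard-coded table, `CITY_UTC_OFFSETS`. It also adds a `now` parameter so the tool can be tested. The project's real `TimeTool` may work quite differently.

```python
from datetime import datetime, timedelta, timezone
from typing import Optional

# Hypothetical lookup table. Fixed offsets are only correct for cities that don't
# observe daylight saving time; a fuller tool would use zoneinfo with IANA names.
CITY_UTC_OFFSETS = {
    "bangalore": timedelta(hours=5, minutes=30),
    "tokyo": timedelta(hours=9),
    "dubai": timedelta(hours=4),
}


class TimeTool:
    # In the project this would subclass tools.base_tool.Tool, like WeatherTool.
    def name(self) -> str:
        return "Time Tool"

    def description(self) -> str:
        return "Provides the current local time for a given city. The payload is just the city. Example: Tokyo"

    def use(self, city: str, now: Optional[datetime] = None) -> str:
        offset = CITY_UTC_OFFSETS.get(city.strip().lower())
        if offset is None:
            return f"Sorry, I don't know the time zone for {city}."
        # `now` can be injected for testing; default to the current UTC time
        now = now or datetime.now(timezone.utc)
        local = now.astimezone(timezone(offset))
        return f"The current time in {city} is {local.strftime('%I:%M %p')}."
```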

Demo: Running the Orchestrator

Here’s a quick demonstration:

Query: “What’s the weather in Bangalore, and what’s the current time?”

Execution:

The orchestrator identifies the intent (weather and time).

Delegates tasks to the respective agents.

Combines responses to provide the final answer.
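The execution steps above can be traced without calling a real model. The sketch below is a simplified, hypothetical version of the orchestrator's loop; `run_plan` and `ScriptedLLM` are illustrative names. A fake LLM replays canned decisions, and each agent result is appended to memory, so the final `respond_to_user` step sees every earlier answer. The `max_steps` limit does not appear in the original `run()`. It is a cheap safeguard against a model that never emits `respond_to_user`.

```python
import json
from typing import Callable


def run_plan(llm: Callable[[str], str], agents: dict[str, Callable[[str], str]],
             user_input: str, max_steps: int = 5) -> str:
    """Ask the LLM for the next action, run the chosen agent, and record the result."""
    memory = [f"User: {user_input}"]
    for _ in range(max_steps):
        decision = json.loads(llm("\n".join(memory)))
        memory.append(f"Orchestrator: {json.dumps(decision)}")
        if decision["action"] == "respond_to_user":
            return decision["input"]  # final answer combining earlier results
        result = agents[decision["action"]](decision["input"])
        memory.append(f"Agent Response for Task: {result}")
    return "Stopped: step limit reached"


class ScriptedLLM:
    """Fake LLM: returns canned replies in order and records every context it receives."""

    def __init__(self, replies: list[str]):
        self._replies = iter(replies)
        self.contexts = []

    def __call__(self, context: str) -> str:
        self.contexts.append(context)
        return next(self._replies)
```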

Example Output:

"The weather in Bangalore is misty with a temperature of 22°C. The current time in Bangalore is 12:27 AM."

```python
from agents.base_agent import Agent
from tools.weather_tool import WeatherTool
from tools.time_tool import TimeTool
from orchestrator import AgentOrchestrator
from dotenv import load_dotenv

# Load environment variables (such as OPENWEATHERMAP_API_KEY) from the .env file
load_dotenv()

# Create Weather Agent
weather_agent = Agent(
    Name="Weather Agent",
    Description="Provides weather information for a given location",
    Tools=[WeatherTool()],
    Model="gpt-4o-mini",
)

# Create Time Agent
time_agent = Agent(
    Name="Time Agent",
    Description="Provides the current time for a given city",
    Tools=[TimeTool()],
    Model="gpt-4o-mini",
)

# Create AgentOrchestrator
agent_orchestrator = AgentOrchestrator([weather_agent, time_agent])

# Run the orchestrator
agent_orchestrator.run()
```

What’s Next?

This orchestrator is just the beginning. You can:

Add more agents for tasks like translation, currency conversion, or database queries.

Optimize prompts for better LLM responses.

Extend the system for real-world applications like customer support or smart assistants.
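Adding an agent mostly means writing a new tool with the same `name()`, `description()`, and `use()` methods and passing it to `Agent(...)`, as the weather and time agents do. As an illustration only, here is a hypothetical currency tool. The caller supplies the exchange rates rather than fetching them from a real rates API, and the payload format is an assumption.

```python
class CurrencyTool:
    """Hypothetical tool following the same name/description/use interface."""

    def __init__(self, usd_rates: dict[str, float]):
        # usd_rates maps a currency code to the USD value of one unit (caller-supplied)
        self.usd_rates = {code.upper(): rate for code, rate in usd_rates.items()}

    def name(self) -> str:
        return "Currency Tool"

    def description(self) -> str:
        return "Converts an amount between currencies. Payload format: <amount> <FROM> to <TO>. Example: 100 USD to EUR"

    def use(self, payload: str) -> str:
        parts = payload.split()
        if len(parts) != 4 or parts[2].lower() != "to":
            return f"Sorry, I couldn't understand '{payload}'."
        amount_text, src, _, dst = parts
        src, dst = src.upper(), dst.upper()
        if src not in self.usd_rates or dst not in self.usd_rates:
            return f"Sorry, I don't have a rate for {src} or {dst}."
        try:
            amount = float(amount_text)
        except ValueError:
            return f"Sorry, '{amount_text}' is not a number."
        # Convert via USD: amount in src -> USD -> dst
        converted = amount * self.usd_rates[src] / self.usd_rates[dst]
        return f"{amount_text} {src} is {converted:.2f} {dst}."
```

It could then be wrapped as `Agent(Name="Currency Agent", Description="Converts amounts between currencies", Tools=[CurrencyTool(rates)], Model="gpt-4o-mini")` and added to the list passed to `AgentOrchestrator`.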

Full Code

https://github.com/zahere-dev/augmate

Final Thoughts

Building a Multi-Agent Orchestrator showcases the power of combining LLMs with task-specific agents. By modularizing tasks and leveraging context effectively, you can create systems that are both scalable and intelligent.

Stay tuned for more updates, and feel free to share your thoughts or ask questions in the comments below. Don’t forget to check out the accompanying video for a detailed walkthrough of the code.

Happy coding! 🚀