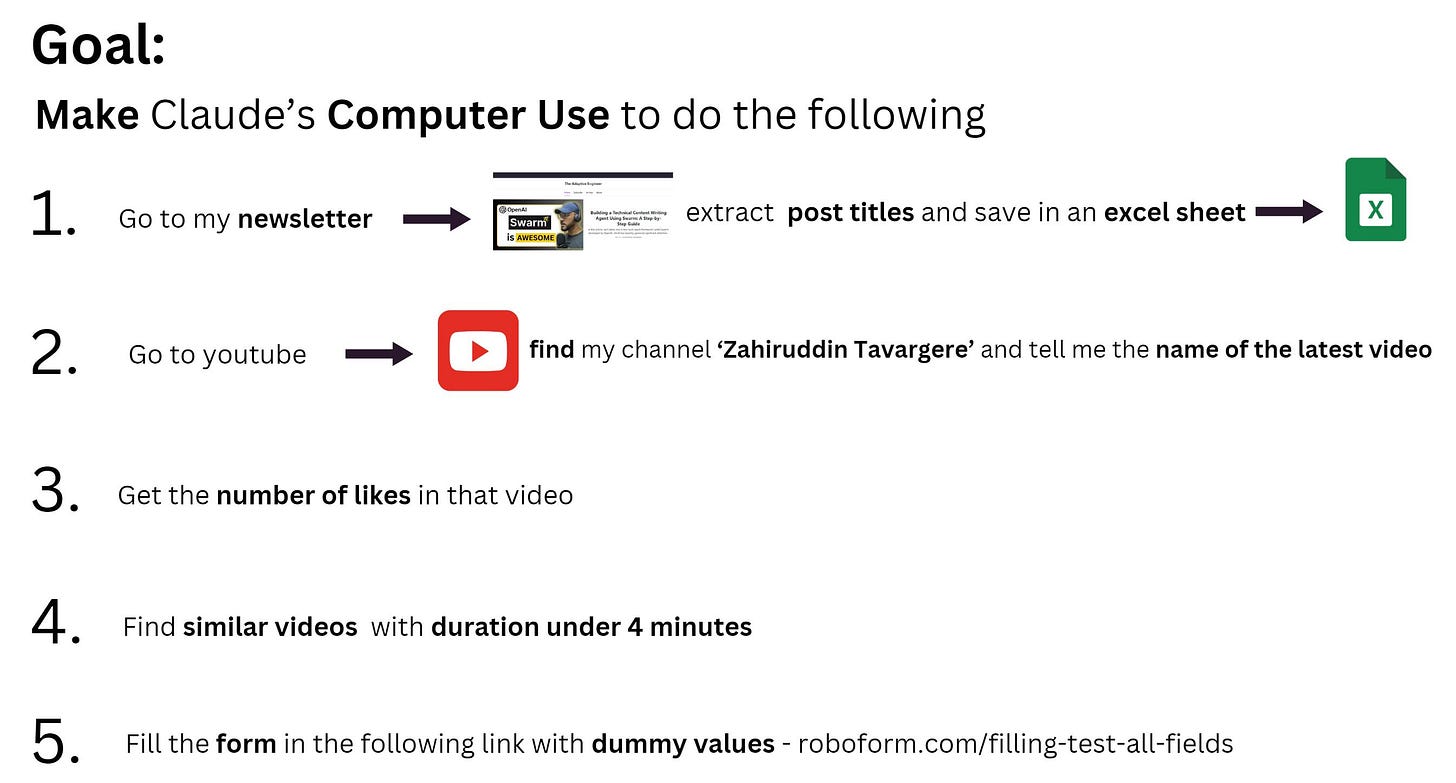

Claude's 'Computer Use' Put to the Test: Insights from 5 Challenges I Gave it

Today, I set out to challenge Claude’s 'Computer Use' with five specific tasks, each designed to evaluate its precision, adaptability, and overall functionality.


What is Claude’s ‘Computer Use’?

Claude 3.5 Sonnet can now interact with the operating system. It can perform tasks the way you would: browsing websites in a web browser, filling in data in a spreadsheet, and so on.
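To make this a bit more concrete, here is a minimal sketch of what a 'Computer Use' request might look like. The model name, beta flag, and tool type are the ones I understand were documented for the October 2024 beta; treat them, the screen size, and the helper name `build_computer_use_request` as assumptions and check the current Anthropic docs before relying on them.

```python
# Sketch only: builds the keyword arguments for a Computer Use request.
# Model name, beta flag, and tool type are assumptions based on the
# October 2024 beta and may have changed since.
def build_computer_use_request(task: str, width: int = 1024, height: int = 768) -> dict:
    """Return keyword arguments for a beta Messages API call with the computer tool."""
    return {
        "model": "claude-3-5-sonnet-20241022",
        "max_tokens": 1024,
        "betas": ["computer-use-2024-10-22"],
        "tools": [
            {
                "type": "computer_20241022",   # screen, mouse, and keyboard tool
                "name": "computer",
                "display_width_px": width,     # illustrative screen size
                "display_height_px": height,
            }
        ],
        "messages": [{"role": "user", "content": task}],
    }


request = build_computer_use_request("Open Firefox and go to example.com")
print(request["tools"][0]["type"])
```

With the `anthropic` Python SDK, a dictionary like this would typically be passed as `client.beta.messages.create(**request)`. The model replies with tool-use actions (take a screenshot, click, type), and the demo app runs them inside the container.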

The goal of this Article

Installation

A detailed explanation of running the code is available in this repo.

anthropic-quickstarts/computer-use-demo at main · anthropics/anthropic-quickstarts

We need a Linux distribution to run ‘Computer Use’ in an isolated environment (Docker).

To install the Windows Subsystem for Linux (WSL), run the command below in CMD. Follow this link for more details.

wsl --install

You will need an Anthropic API key. You can get that from here.

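Start Docker and run the snippet below in CMD with your own API key. The snippet uses CMD syntax (SET, %VAR%, and ^ for line continuation), so bash and PowerShell need their own equivalents.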

SET ANTHROPIC_API_KEY=your-api-key-here

docker run ^
-e ANTHROPIC_API_KEY=%ANTHROPIC_API_KEY% ^
-v %USERPROFILE%\.anthropic:/home/computeruse/.anthropic ^
-p 5900:5900 ^
-p 8501:8501 ^
-p 6080:6080 ^
-p 8080:8080 ^
-it ghcr.io/anthropics/anthropic-quickstarts:computer-use-demo-latest

Once the image has been pulled and the container is up, you should see the agent running at http://localhost:8080

Challenge 1: Extracting Article Titles from My Newsletter

The first task aimed to test the agent's ability to interact with a webpage and extract data. I provided the agent with a URL to my newsletter, asking it to capture all article titles and store them in an Excel sheet. This sounds simple, but it required the agent to navigate a few hurdles:

Navigating the Website: The agent successfully opened the URL in Firefox and accessed the page.

Handling a Subscription Page: It encountered a subscription page and asked for input on how to proceed. After selecting the public archive, it moved forward.

Using RSS Feeds: The agent smartly identified an RSS feed on the page and used it to extract article titles.

The task was completed, and the agent saved around 20 article titles in an Excel file. While it wasn’t a straightforward front-end automation use case, the agent demonstrated adaptability in handling unexpected scenarios.

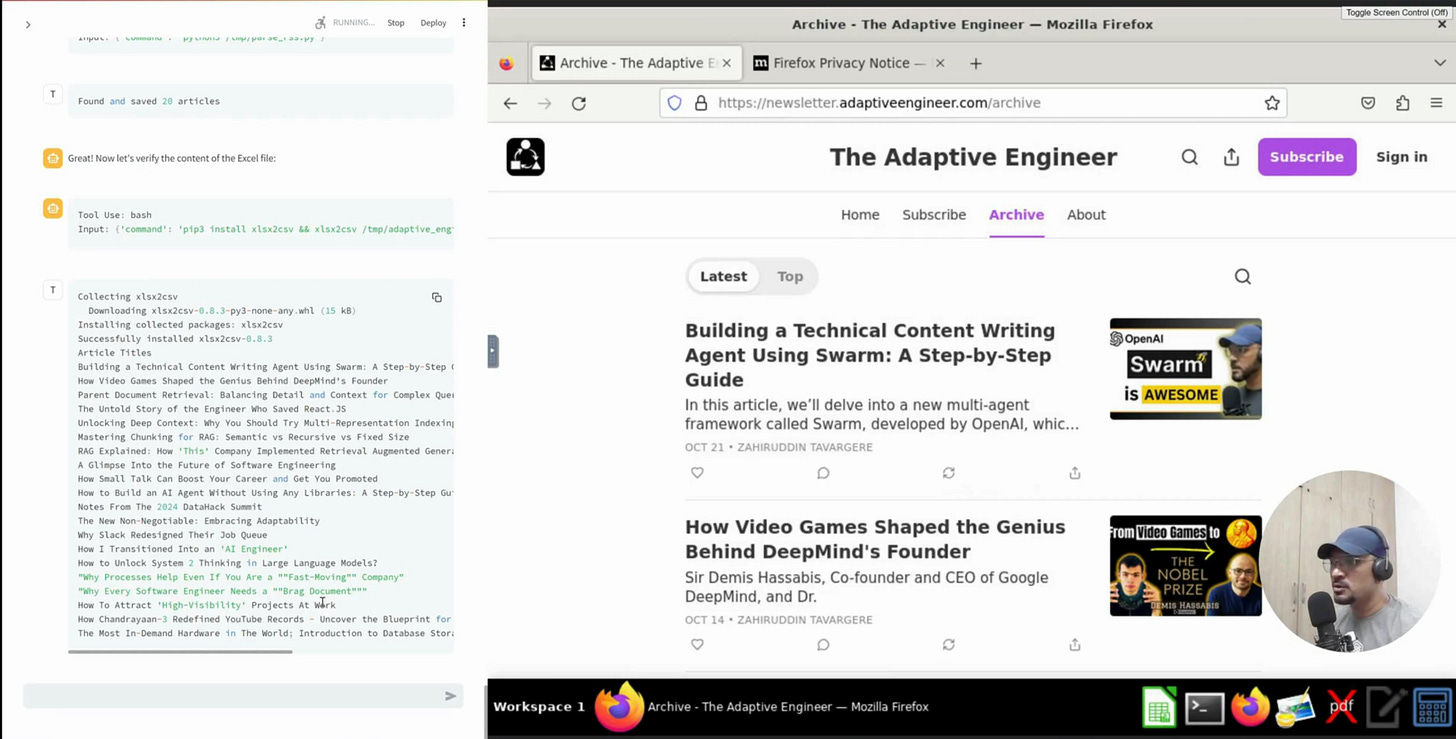
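For readers curious what the RSS route looks like in code, here is a minimal sketch of the same idea: read the `<title>` of every `<item>` in a feed and write the titles to a file a spreadsheet can open. This is not what the agent actually ran. The sample feed, the function names, and the choice of CSV instead of a native Excel file are all my assumptions for illustration.

```python
import csv
import io
import xml.etree.ElementTree as ET

# Hypothetical sample feed; a real run would download the newsletter's RSS URL.
SAMPLE_RSS = """<rss version="2.0">
  <channel>
    <title>Example Newsletter</title>
    <item><title>First post</title><link>https://example.com/1</link></item>
    <item><title>Second post</title><link>https://example.com/2</link></item>
  </channel>
</rss>"""


def extract_titles(rss_text: str) -> list[str]:
    """Return the title of every <item> in an RSS 2.0 document."""
    root = ET.fromstring(rss_text)
    return [item.findtext("title", default="").strip() for item in root.iter("item")]


def titles_to_csv(titles: list[str]) -> str:
    """Render the titles as one-column CSV text with a header row."""
    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator="\n")
    writer.writerow(["title"])
    for title in titles:
        writer.writerow([title])
    return buffer.getvalue()


titles = extract_titles(SAMPLE_RSS)
csv_text = titles_to_csv(titles)
print(csv_text)
```

Only the `<item>` titles are collected, so the channel's own title does not end up in the sheet. Writing `csv_text` to a `.csv` file gives something Excel opens directly.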

Challenge 2: Finding the Latest Video on My YouTube Channel

Next, I asked the agent to find the latest video from my YouTube channel. Here’s how it went:

Channel Search: The agent navigated to YouTube and searched for my channel, "Zahiruddin Tavergere."

Fetching the Latest Video: It successfully identified my latest video titled "I Created a Blogging Agent in 5 Minutes Using OpenAI's Swarm".

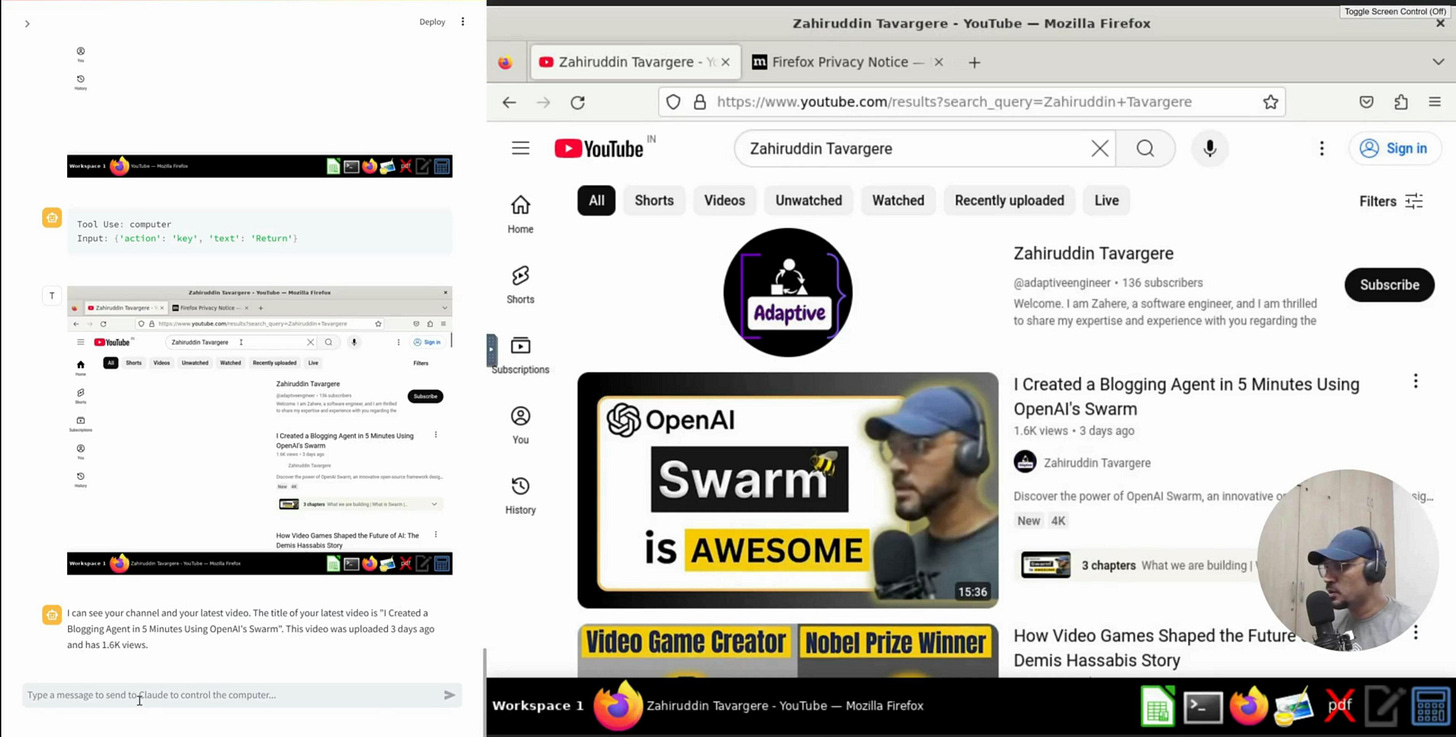

Challenge 3: Finding the Number of Likes on the Video (from Challenge 2)

Extracting Likes: I then asked the agent to find the number of likes on that video, and it efficiently navigated the page to retrieve this data.

The agent performed well here, understanding my commands even when they weren't perfectly specific. This task demonstrated its ability to interpret and execute instructions with some degree of context awareness.

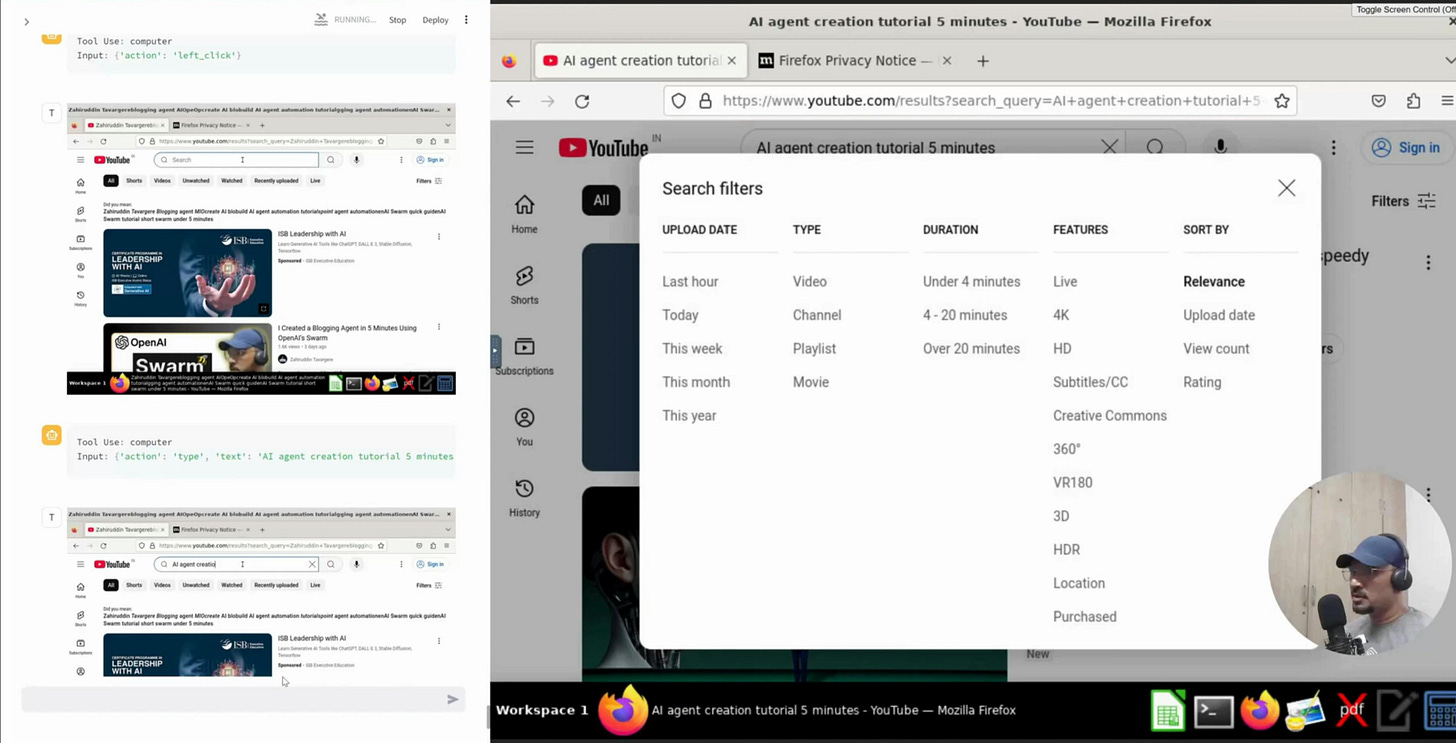

Challenge 4: Finding Similar Videos under 4 Minutes

This task was more complex and required the agent to not only search for videos but also filter results by duration. Here’s the breakdown:

Understanding the Prompt: I asked the agent to find videos similar to my latest one, but with a duration of under 4 minutes.

Using Filters: The agent used the search bar to enter the query, applied a duration filter, and selected the "Under 4 minutes" option.

Evaluating Results: It was able to find a few similar videos, although some of the results didn’t exactly match the duration criteria.

This task highlighted the potential for LLMs to automate more complex workflows by combining search, filters, and contextual understanding, making them highly useful in automation scenarios.

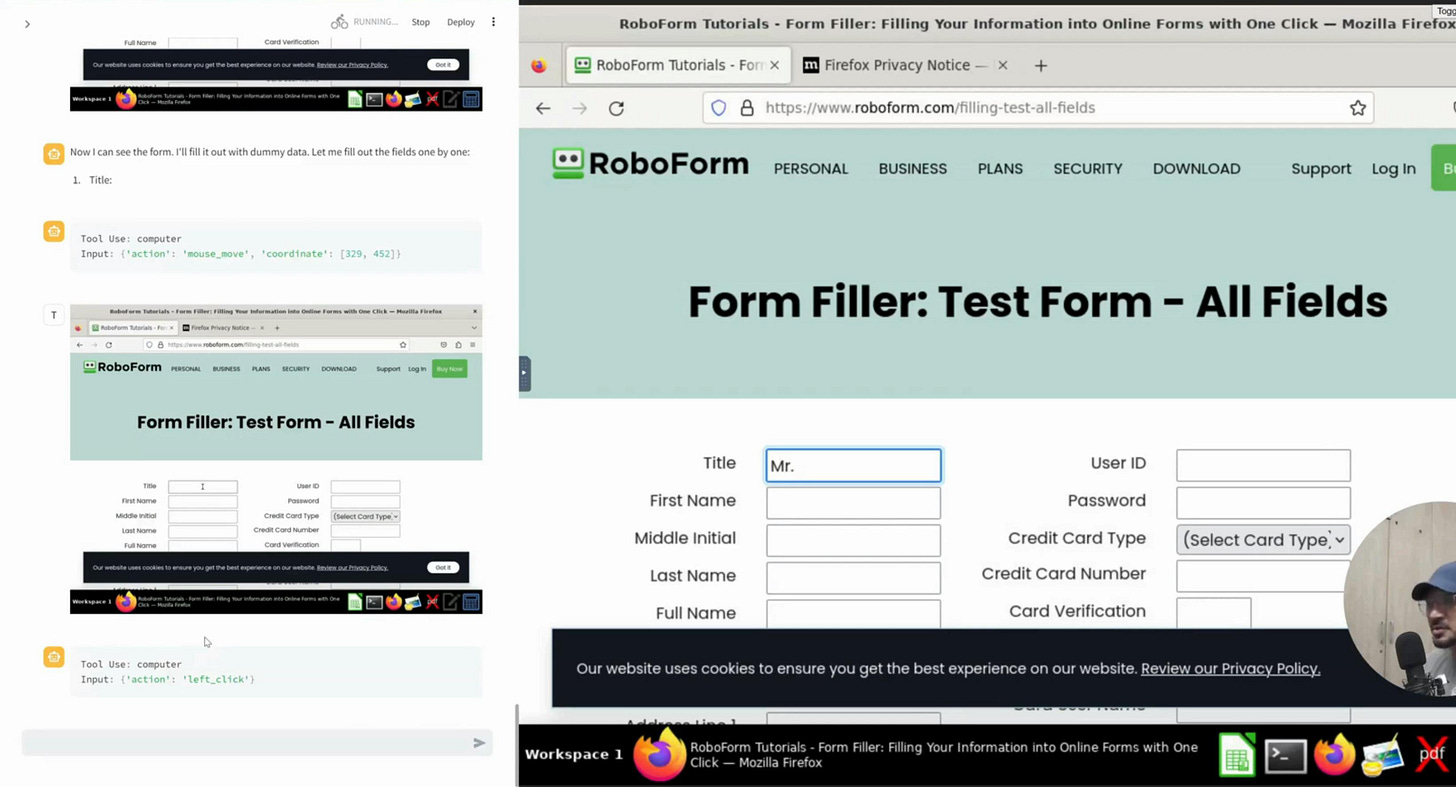
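Since some results slipped past the duration filter, a small check after the search can help. The sketch below assumes each result comes with a duration label such as "3:45" or "1:02:10"; the video entries and function names are hypothetical and only illustrate the filtering step.

```python
def duration_to_seconds(text: str) -> int:
    """Convert an 'M:SS' or 'H:MM:SS' label into a number of seconds."""
    seconds = 0
    for part in text.strip().split(":"):
        seconds = seconds * 60 + int(part)
    return seconds


def under_limit(videos: list[dict], limit_seconds: int = 240) -> list[dict]:
    """Keep only the videos strictly shorter than the limit (4 minutes by default)."""
    return [v for v in videos if duration_to_seconds(v["duration"]) < limit_seconds]


# Hypothetical search results, not real videos.
videos = [
    {"title": "Hypothetical video A", "duration": "3:45"},
    {"title": "Hypothetical video B", "duration": "4:00"},
    {"title": "Hypothetical video C", "duration": "1:02:10"},
]

short = under_limit(videos)
print([v["title"] for v in short])
```

A video that is exactly 4:00 is excluded here because the comparison is strict, which matches an "under 4 minutes" reading of the prompt.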

Challenge 5: Automating Form Filling

One of the most common use cases in the RPA (Robotic Process Automation) industry is automating form filling. Here’s how the agent performed:

Accessing the Form: I provided a URL to a dummy form from RoboForm, a password manager.

Filling in Data: The agent quickly identified the form fields and filled in the first name and last name.

Handling Errors: Midway through, I encountered some technical issues and rate limits, which required restarting the application.

This task demonstrated the potential of using LLMs for repetitive tasks like filling out forms, which could significantly save time in enterprise environments.
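Rate limits like the ones I hit are usually handled by retrying with a growing pause instead of restarting everything. Here is a minimal sketch of exponential backoff. `RateLimitError` is a stand-in I defined for whatever error your API client raises on a rate limit, and `flaky_request` is a fake call that fails twice before succeeding, so none of this is tied to a specific library.

```python
import time


class RateLimitError(Exception):
    """Stand-in for the error an API client raises when it is rate limited."""


def call_with_backoff(func, max_attempts=5, base_delay=1.0, sleep=time.sleep):
    """Call func, retrying on RateLimitError with delays of 1x, 2x, 4x... base_delay."""
    for attempt in range(max_attempts):
        try:
            return func()
        except RateLimitError:
            if attempt == max_attempts - 1:
                raise  # out of attempts, surface the error to the caller
            sleep(base_delay * 2 ** attempt)


# Fake request that is rate limited twice and then succeeds.
calls = {"count": 0}


def flaky_request():
    calls["count"] += 1
    if calls["count"] < 3:
        raise RateLimitError("rate limited")
    return "ok"


delays = []  # record the pauses instead of actually sleeping
result = call_with_backoff(flaky_request, sleep=delays.append)
print(result, delays)
```

Passing `sleep=delays.append` keeps the demo instant while still showing the pauses a real run would take. In practice you would leave the default `time.sleep`.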

The Bigger Picture: Andrej Karpathy's Vision of LLMs and Automation

Reflecting on these experiments, I couldn’t help but think about Andrej Karpathy's vision for the future of LLMs: an "LLMOS" (Large Language Model Operating System).

Imagine an LLM that can access OS-level functions, with unlimited memory and a singular focus on completing specific tasks. Here’s what that could look like in practice:

Intelligent Decision-Making: LLMs trained on specific datasets could make small decisions autonomously, handling routine tasks without human intervention.

Enterprise Integration: In a business setting, this could mean automating tasks like customer support, form filling, or data entry, making processes more efficient.

This vision excites me as someone who has been in the automation space for a long time, and it shows how the lines between front-end automation and intelligent decision-making are beginning to blur.

Conclusion: The Future of Front-End Automation with LLMs

These experiments were a glimpse into the future of AI-driven automation. From extracting data to interacting with websites and filling out forms, LLMs are proving to be versatile tools.

While Claude’s ‘Computer Use’ has challenges like rate limits and technical hiccups, the potential for transforming enterprise automation is immense.

If you found this exploration interesting and want to learn more about the intersection of AI and automation, don’t forget to subscribe to my YouTube channel. I regularly share insights and tutorials on how to leverage AI tools effectively.

See you in the next post!