How Chandrayaan-3 Redefined YouTube Records - Uncover the Blueprint for Crafting Your Ultimate Live Video Streaming Service!

Read Time: 5 mins 55 secs (Know that your time is invaluable to me!)

Hey friend!

Today we’ll be talking about:

Chandrayaan-3 Landing Broke YouTube Record - Let’s Learn To Design A Live Video Streaming Service

The Context

Top 3 Most Viewed Live Streams on YouTube

Global Record For Concurrent Live Stream Views

Functional Requirements

Non-functional Requirements

Challenges

Live Streaming Service for a Single User

Designing For More Than 2 Crore Concurrent Users

The Context

On August 23, 2023, a historic moment unfolded as the Indian Space Research Organisation (ISRO) set a new YouTube record for the most-watched live stream.

The live stream of the Chandrayaan-3 lunar landing captured the attention of millions worldwide, peaking at an astounding 8 million concurrent viewers, the highest for any live stream on the platform.

Top 3 Most Viewed Live Streams on YouTube

🥇Rank: 1

YouTube Stream: ISRO – Chandrayaan 3 Mission Soft Landing

Peak Real-time Views: 8.06 million

🥈Rank: 2

YouTube Stream: CazéTV – World Cup 2022 QF Brazil vs Croatia

Peak Real-time Views: 6.1 million

🥉Rank: 3

YouTube Stream: CazéTV – Brazil vs. South Korea 2022 World Cup

Peak Real-time Views: 5.2 million

The event, which united a nation of 1.4 billion people, was streamed live on multiple platforms and channels, splitting the audience across them.

If YouTube had exclusive rights to the stream, then the record could have been at least 3-4x bigger.

I say this because another event that united India set the global record for concurrent viewers on a live stream.

The final of the 2023 Indian Premier League (IPL) drew 32 million concurrent viewers on JioCinema. That is staggering.

Global Record For Concurrent Live Stream Views

🥇Rank: 1

Streaming Service and Event: JioCinema, CSK vs. GT IPL 2023 Final

Peak Concurrent Viewers: 32 million

🥈Rank: 2

Streaming Service and Event: Disney Hotstar, 2019 CWC Semi-final between IND and NZ

Peak Concurrent Viewers: 25.3 million

🥉Rank: 3

Streaming Service and Event: JioCinema, CSK vs. RCB IPL 2023

Peak Concurrent Viewers: 24 million

I cannot stop marveling at the scale at which services like JioCinema, YouTube, or Hotstar+AWS+Akamai are operating.

The engineers who work on these solutions must be so proud to see the world enjoying the fruits of their labors.

Working on problems at this scale is what most engineers (at least I) yearn for.

So what does it take to build a Live Streaming Service like JioCinema or Hotstar? Let's find out.

Functional Requirements

Stream live sports (or any other event) video to a global audience on any device and at any bandwidth, with low latency

Users should be able to like and comment in real-time

Store the entire video for later replay

Non-functional Requirements

There should be no buffering, i.e., streaming without any lag

Reliable system

High Availability

Scalability

Challenges

Latency - keeping the delay as low as possible

Scale - millions of users concurrently watching

Multiple devices - different resolutions and bandwidths

Live Streaming Service for a Single User

Live streaming involves four stages:

Publishing - capturing the live feed

Transforming - converting into multiple formats

Distributing - transporting the video to the client

Viewing - Watching the feed on the UI

Publishing

The first step in the publishing process is to acquire the camera feed. In the case of sporting events, the camera feed is usually backhauled to a central production facility via a satellite link or a dedicated high-speed Internet line.

The most popular protocol for delivering a video feed to a streaming server is RTMP.

Real-Time Messaging Protocol (RTMP) is a TCP-based protocol designed to maintain persistent, low-latency connections while transmitting content from the source (encoder) to a hosting server.
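To make the "persistent connection" part more concrete, here is a minimal sketch of how an RTMP session opens, based on the plain handshake in Adobe's RTMP specification: the client sends a one-byte version (C0, value 3) and a 1536-byte C1 packet (4-byte timestamp, 4 zero bytes, 1528 random bytes), then answers the server's S1 with an echo called C2. The function names are hypothetical, and the sketch covers only the handshake bytes, not the messaging that follows.

```python
import os
import struct

RTMP_VERSION = 3        # C0: plain (unencrypted) RTMP
HANDSHAKE_SIZE = 1536   # C1, C2, S1 and S2 are all 1536 bytes


def build_c0_c1(timestamp_ms: int = 0) -> bytes:
    """Client's opening bytes: C0 (version byte) followed by C1."""
    c0 = bytes([RTMP_VERSION])
    # C1 = 4-byte time + 4 zero bytes + 1528 random bytes, in network (big-endian) order
    c1 = struct.pack(">II", timestamp_ms & 0xFFFFFFFF, 0) + os.urandom(HANDSHAKE_SIZE - 8)
    return c0 + c1


def build_c2(s1: bytes, read_time_ms: int) -> bytes:
    """C2 echoes the server's S1: S1's time, when we read S1, and S1's random bytes."""
    if len(s1) != HANDSHAKE_SIZE:
        raise ValueError("S1 must be exactly 1536 bytes")
    return s1[:4] + struct.pack(">I", read_time_ms & 0xFFFFFFFF) + s1[8:]
```

Over a real TCP socket (RTMP conventionally uses port 1935), the encoder would send `build_c0_c1()`, read S0 and S1, reply with `build_c2(...)`, and wait for S2 before sending any RTMP messages.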

The camera feed is pushed to an RTMP encoder server over HDMI. The encoder converts the incoming video to the desired bitrate and codec, usually H.264 for video and AAC for audio.

The encoder then sends the encoded video to the cloud server using RTMP.

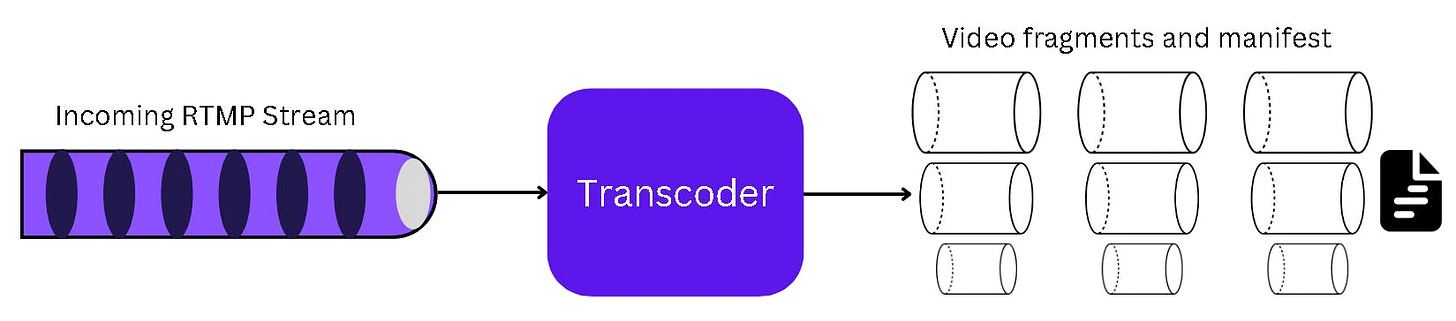
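A common way to reproduce the encoder step in a lab is FFmpeg. The sketch below only builds the command line: it encodes video with libx264 (H.264) and audio with AAC, then muxes into FLV, the container RTMP carries. The ingest URL, bitrates, and the 30-frame keyframe interval (one keyframe per second, assuming 30 fps) are illustrative assumptions, not settings from any real service.

```python
import shlex
from typing import List


def build_rtmp_push_command(source: str, ingest_url: str,
                            video_bitrate: str = "6000k",
                            audio_bitrate: str = "128k",
                            gop_frames: int = 30) -> List[str]:
    """Return an ffmpeg argv that encodes `source` to H.264/AAC and pushes it over RTMP."""
    return [
        "ffmpeg",
        "-re",                      # read input at its native frame rate, like a live feed
        "-i", source,
        "-c:v", "libx264",          # H.264 video
        "-preset", "veryfast",      # favour encoding speed for live use
        "-b:v", video_bitrate,
        "-g", str(gop_frames),      # keyframe interval, in frames
        "-c:a", "aac",              # AAC audio
        "-b:a", audio_bitrate,
        "-f", "flv",                # RTMP carries FLV-muxed audio/video
        ingest_url,
    ]


if __name__ == "__main__":
    # Hypothetical ingest endpoint and stream key
    cmd = build_rtmp_push_command("camera_feed.mp4",
                                  "rtmp://ingest.example.com/live/STREAM_KEY")
    print(shlex.join(cmd))
    # To actually push: subprocess.run(cmd, check=True)
```

Running the printed command requires an FFmpeg build with libx264 and a reachable RTMP server.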

Transforming

One of the challenges in live streaming is serving many types of devices, such as mobiles, desktops, and smart TVs. To handle this, the ingested video, typically a high-resolution feed (4K, or even 8K), is converted into multiple formats. This conversion process is called transcoding.

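Essentially, the incoming RTMP stream is transcoded into multiple bitrates and split into short chunks for adaptive bitrate streaming, which lets each player switch between bitrates as its network conditions change.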

The transcoder takes the 8K/4K input stream and converts it into lower-bitrate streams such as HD at 6 Mbps, 3 Mbps, 1 Mbps, and 600 kbps.
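On the player side, adaptive bitrate streaming comes down to picking a rendition from this ladder. The sketch below uses one simple throughput rule: choose the highest bitrate that fits within a safety margin of the measured bandwidth. The 0.8 safety factor and the rendition names are assumptions; real players use more elaborate heuristics (for example, also weighing buffer level).

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Rendition:
    name: str
    bitrate_kbps: int


# Bitrate ladder from the text; the names are hypothetical labels.
LADDER = [
    Rendition("6m-hd", 6000),
    Rendition("3m", 3000),
    Rendition("1m", 1000),
    Rendition("600k", 600),
]


def pick_rendition(ladder: List[Rendition], throughput_kbps: float,
                   safety: float = 0.8) -> Rendition:
    """Highest-bitrate rendition within `safety` x measured throughput.

    Falls back to the lowest rendition when even that does not fit.
    """
    budget = throughput_kbps * safety
    ordered = sorted(ladder, key=lambda r: r.bitrate_kbps, reverse=True)
    for rendition in ordered:
        if rendition.bitrate_kbps <= budget:
            return rendition
    return ordered[-1]
```

The safety margin leaves headroom for throughput dips, trading a little quality for fewer rebuffering events.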

The streaming server also pushes every version of the video to Amazon S3-like storage for later playback.
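For replay to work, archived segments need a predictable naming scheme. Here is a minimal sketch, assuming a hypothetical `stream_id/rendition/seg-NNNNNNNN.ts` key layout with MPEG-TS segments. Zero-padding the sequence number keeps lexicographic key order equal to playback order, which matters because S3 lists object keys in UTF-8 binary order.

```python
from typing import List


def segment_key(stream_id: str, rendition: str, sequence: int) -> str:
    """Object key for one archived segment (hypothetical layout)."""
    # Zero-pad to 8 digits so lexicographic order matches numeric order.
    return f"{stream_id}/{rendition}/seg-{sequence:08d}.ts"


def replay_keys(stream_id: str, rendition: str, first: int, last: int) -> List[str]:
    """Keys for segments first..last (inclusive), in playback order."""
    return [segment_key(stream_id, rendition, n) for n in range(first, last + 1)]


if __name__ == "__main__":
    # Without padding, "seg-10.ts" would sort before "seg-9.ts".
    print(replay_keys("ind-vs-pak", "3m", 9, 11))
```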

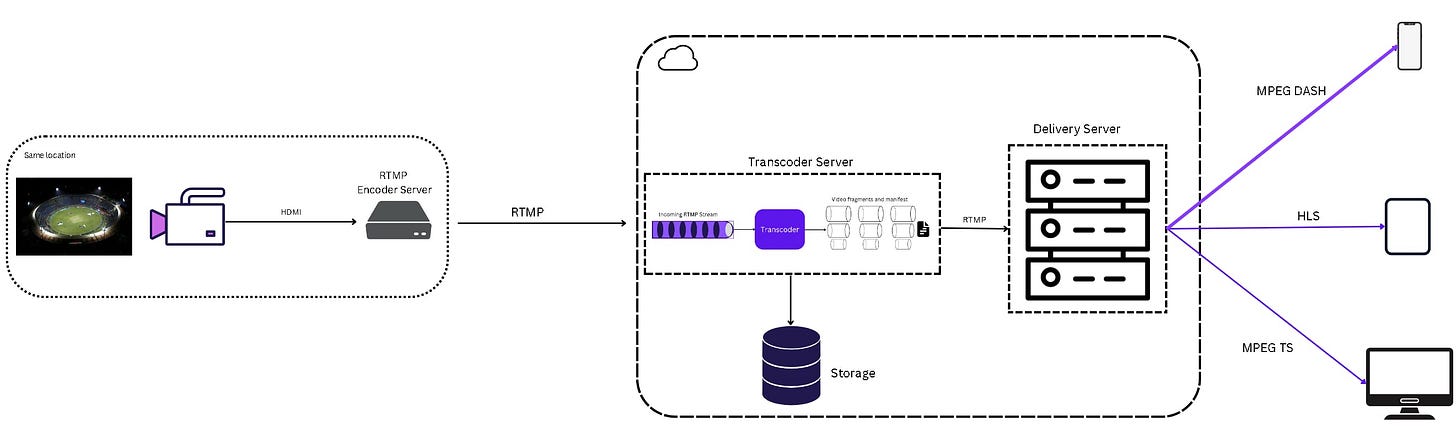

Distributing

The transcoder sends the chunked videos to a Delivery/Media server.

The web player on the client connects to the media server and requests the live video. The media server, which here acts much like an edge server, responds with the stream in the format and bitrate the player requested.
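The text doesn't name a delivery protocol. Assuming HLS (HTTP Live Streaming, RFC 8216), one common choice alongside MPEG-DASH, the player discovers the available formats through a master playlist that lists each rendition with its bandwidth. Below is a minimal sketch that generates one. The resolutions and playlist URIs are hypothetical.

```python
from typing import List, Tuple

# (peak bits per second, resolution, media playlist URI); values are hypothetical
VARIANTS: List[Tuple[int, str, str]] = [
    (6_000_000, "1920x1080", "6m/index.m3u8"),
    (3_000_000, "1280x720", "3m/index.m3u8"),
    (1_000_000, "854x480", "1m/index.m3u8"),
    (600_000, "640x360", "600k/index.m3u8"),
]


def master_playlist(variants: List[Tuple[int, str, str]]) -> str:
    """Render an HLS master playlist: one EXT-X-STREAM-INF entry per rendition."""
    lines = ["#EXTM3U"]
    for bandwidth, resolution, uri in variants:
        # BANDWIDTH is required; RESOLUTION helps the player filter by screen size.
        lines.append(f"#EXT-X-STREAM-INF:BANDWIDTH={bandwidth},RESOLUTION={resolution}")
        lines.append(uri)
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    print(master_playlist(VARIANTS), end="")
```

The player fetches this playlist once, then repeatedly fetches the media playlist of whichever rendition its ABR logic selects.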

Designing For More Than 2 Crore Concurrent Users

Let's scale this to 20M+ users

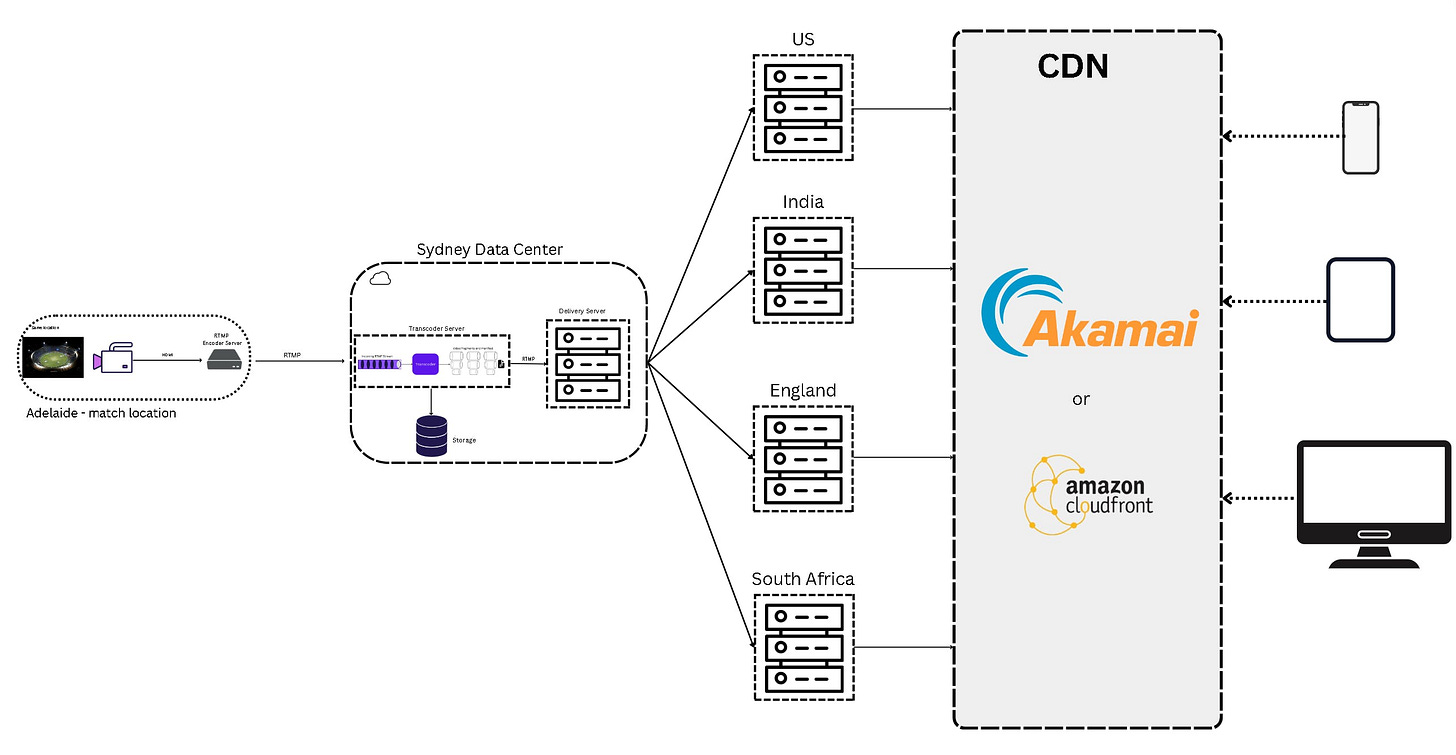

Hypothetical scenario

Event: Cricket Match India vs Pakistan

Location: Adelaide, Australia

Let's focus on two workflows:

Ingestion: Video Feed to Distribution

Playback: Player to Distribution servers

Ingestion is CPU-intensive. To stream easily over the network, the RTMP stream has to be transcoded into multiple bitrates, with each bitrate split into one-second chunks.
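Each rendition's one-second chunks are typically advertised to players through a live media playlist that slides forward as new chunks arrive. Here is a minimal sketch assuming HLS. The six-segment window and the segment URIs are hypothetical choices. The playlist omits `#EXT-X-ENDLIST` because the stream is still live.

```python
from collections import deque
from typing import Deque, Tuple


class LivePlaylist:
    """Sliding-window HLS media playlist for one rendition (a sketch)."""

    def __init__(self, target_duration: int = 1, window: int = 6) -> None:
        self.target_duration = target_duration
        # Only the most recent `window` segments are listed; older ones drop off.
        self.segments: Deque[Tuple[int, float, str]] = deque(maxlen=window)
        self.next_sequence = 0

    def add_segment(self, duration: float, uri: str) -> None:
        self.segments.append((self.next_sequence, duration, uri))
        self.next_sequence += 1

    def render(self) -> str:
        # EXT-X-MEDIA-SEQUENCE is the sequence number of the first listed segment.
        first_seq = self.segments[0][0] if self.segments else self.next_sequence
        lines = [
            "#EXTM3U",
            "#EXT-X-VERSION:3",  # version 3+ allows decimal EXTINF durations
            f"#EXT-X-TARGETDURATION:{self.target_duration}",
            f"#EXT-X-MEDIA-SEQUENCE:{first_seq}",
        ]
        for _, duration, uri in self.segments:
            lines.append(f"#EXTINF:{duration:.3f},")
            lines.append(uri)
        return "\n".join(lines) + "\n"
```

At one segment per second, each rendition produces 3,600 segments per hour, so a four-rendition ladder (6 Mbps, 3 Mbps, 1 Mbps, 600 kbps) yields 14,400 segments per hour of play.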

Let's revisit the Transcoder Server with this illustration

A global sports event streaming company can either own its data centers (DCs) across the globe in strategic locations or outsource the infrastructure to a vendor like AWS.

Either way, every data center has streaming servers in place to ingest the incoming feed.

The video production team at the Adelaide Cricket Stadium initiates the stream and connects to the streaming server API in the nearest data center, in Sydney, which returns a stream ID.
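A hypothetical sketch of that exchange: pick the data center with the lowest measured round-trip time, then register the stream to get an ID and an RTMP ingest URL. The RTT values, DC names, URL pattern, and both function names are assumptions for illustration, not a real provider's API.

```python
import uuid
from typing import Dict


def pick_ingest_dc(rtt_ms: Dict[str, float]) -> str:
    """Return the data center with the lowest measured round-trip time."""
    if not rtt_ms:
        raise ValueError("no candidate data centers")
    return min(rtt_ms, key=lambda dc: rtt_ms[dc])


def register_stream(dc: str) -> Dict[str, str]:
    """Hypothetical 'create stream' call returning a stream ID and RTMP ingest URL."""
    stream_id = uuid.uuid4().hex
    return {
        "dc": dc,
        "stream_id": stream_id,
        "ingest_url": f"rtmp://ingest.{dc}.example.com/live/{stream_id}",
    }


if __name__ == "__main__":
    # Hypothetical RTT measurements taken from the stadium
    measured = {"sydney": 22.0, "singapore": 95.0, "mumbai": 150.0}
    print(register_stream(pick_ingest_dc(measured)))
```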

The servers transcode the video into multiple formats and bitrates and push the video segments to host servers in data centers across the globe.
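Pushing each segment to many data centers is a fan-out. Here is a minimal sketch, assuming a hypothetical `upload(dc, key, data)` transfer function: uploads run in parallel so one slow or failed link does not hold up the others, and the caller gets per-DC status back so it can retry.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict, List

Uploader = Callable[[str, str, bytes], None]  # (dc, key, data); raises on failure


def fan_out(key: str, data: bytes, dcs: List[str], upload: Uploader) -> Dict[str, bool]:
    """Push one segment to every data center in parallel; return per-DC success."""
    def push(dc: str) -> bool:
        try:
            upload(dc, key, data)
            return True
        except Exception:
            # One DC failing must not block delivery to the rest.
            return False

    with ThreadPoolExecutor(max_workers=max(1, len(dcs))) as pool:
        results = list(pool.map(push, dcs))
    return dict(zip(dcs, results))
```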

Caching the video segments in multiple DCs does reduce the load on the source server, but on its own this is good enough for a few hundred users only.

In a high-stakes match like India vs Pakistan, however, traffic can surge dramatically at specific moments.

Too many requests hitting a few DCs at once can stampede the system, causing lag, dropouts, and disconnections from the stream. This is known as the “Thundering Herd” problem.
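One standard mitigation at the cache layer is request coalescing (also called request collapsing). The text doesn't name it, but many CDNs offer it. When thousands of viewers miss the cache for the same new segment at the same moment, only one request goes upstream and the rest wait for its result. Here is a minimal thread-based sketch. The class names and the `fetch` callback are hypothetical.

```python
import threading
from typing import Callable, Dict, Optional


class _Call:
    """State of one in-flight upstream fetch, shared by all waiting callers."""

    def __init__(self) -> None:
        self.done = threading.Event()
        self.result: bytes = b""
        self.error: Optional[BaseException] = None


class RequestCoalescer:
    """Collapse concurrent misses for the same key into a single upstream fetch."""

    def __init__(self, fetch: Callable[[str], bytes]) -> None:
        self._fetch = fetch  # e.g. a request to the regional cache or origin
        self._lock = threading.Lock()
        self._inflight: Dict[str, _Call] = {}

    def get(self, key: str) -> bytes:
        with self._lock:
            call = self._inflight.get(key)
            is_leader = call is None
            if is_leader:
                call = _Call()
                self._inflight[key] = call
        if is_leader:
            # Only the first caller for this key goes upstream.
            try:
                call.result = self._fetch(key)
            except Exception as exc:
                call.error = exc
            finally:
                with self._lock:
                    del self._inflight[key]
                call.done.set()
        else:
            # Everyone else waits for the leader instead of hitting upstream.
            call.done.wait()
        if call.error is not None:
            raise call.error
        return call.result
```

In a real edge server the fetched segment would also be written to the cache, so requests arriving after the fetch completes become ordinary cache hits.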

This is where a CDN (Content Delivery Network) comes in.

Instead of having clients connect directly to the live stream server, they are routed to the CDN edge servers.

CDN edge servers can either pull the data from the source data centers, or the data centers can push the video segments to CDN servers across the globe.

When a player connects to a CDN server, the segment request is handled by one of the HTTP proxies at the CDN PoP (Point of Presence), which checks whether the segment is already in the edge cache.

If the segment is in the cache, it is returned directly from there. If not, the proxy issues an HTTP request to the regional DC cache. If the segment is not in the DC cache either, the request goes to the origin server handling that particular stream.
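The lookup chain of edge cache, then regional DC cache, then origin can be sketched as cache tiers that each fall back to the next one on a miss. This is a simplified model under stated assumptions: in-memory dictionaries stand in for caches, and there is no eviction or TTL. A real live-segment cache would need both, because segments go stale within seconds.

```python
from typing import Callable, Dict


class CacheTier:
    """One cache layer (edge PoP or regional DC) that falls back upstream on a miss."""

    def __init__(self, name: str, upstream: Callable[[str], bytes]) -> None:
        self.name = name
        self._store: Dict[str, bytes] = {}
        self._upstream = upstream  # next tier's get(), or the origin for the last tier
        self.hits = 0
        self.misses = 0

    def get(self, key: str) -> bytes:
        if key in self._store:
            self.hits += 1
            return self._store[key]
        self.misses += 1
        data = self._upstream(key)
        self._store[key] = data  # fill this tier so the next request is a hit
        return data
```

Wiring two edges to one regional tier shows the effect: the first edge miss travels all the way to origin, while the second edge's miss stops at the regional cache.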

This way, around 90% of the requests are served by CDN servers, sharply reducing the load on the origin servers.
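A back-of-the-envelope check on why the hit rate matters. Assume every viewer fetches one one-second segment per second, and ignore playlist requests. At 20 million viewers the edge then sees about 20 million requests per second, and a 90% edge hit rate leaves about 2 million for the regional tier. The 90% regional hit rate used below is a hypothetical figure.

```python
from typing import Dict


def tiered_load(viewers: int, segment_seconds: float,
                edge_hit: float, regional_hit: float) -> Dict[str, float]:
    """Requests per second arriving at each tier of the cache hierarchy."""
    edge_rps = viewers / segment_seconds              # every viewer asks the edge
    regional_rps = edge_rps * (1 - edge_hit)          # only edge misses go regional
    origin_rps = regional_rps * (1 - regional_hit)    # only regional misses reach origin
    return {"edge": edge_rps, "regional": regional_rps, "origin": origin_rps}


if __name__ == "__main__":
    load = tiered_load(20_000_000, 1.0, edge_hit=0.90, regional_hit=0.90)
    for tier, rps in load.items():
        print(f"{tier:>8}: {rps:,.0f} req/s")
```

Each tier multiplies the reduction, so even modest improvements in edge hit rate translate into large savings at the origin.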