How Can We Unlock System 2 Thinking in Large Language Models?

Read Time: 3 mins 29 secs (Know that your time means a lot to me!)

Hi friend,

My sincere apologies for not publishing an article for the past few weeks; I was transitioning into a new role as a Gen AI engineer. I am super excited to share all my learnings with you.

Today, I want to share an interesting topic I came across recently.

'Intro to Large Language Models' by Andrej Karpathy is one of the best explanations of LLMs you will find on the internet today.

For the uninitiated, Andrej was a research scientist and founding member of OpenAI before joining Tesla as Senior Director of AI, where he worked on Autopilot. He returned to OpenAI in 2023. Rest assured, he knows what he is talking about.

Andrej makes an interesting point: today's state-of-the-art LLMs excel at instinctive tasks, such as answering straightforward questions or generating text from patterns they have learned, but they fall short on more complex queries that require reasoning.

In Andrej's framing, today's LLMs are capable of System 1 thinking but fall short on System 2 thinking.

What are System 1 and System 2 thinking?

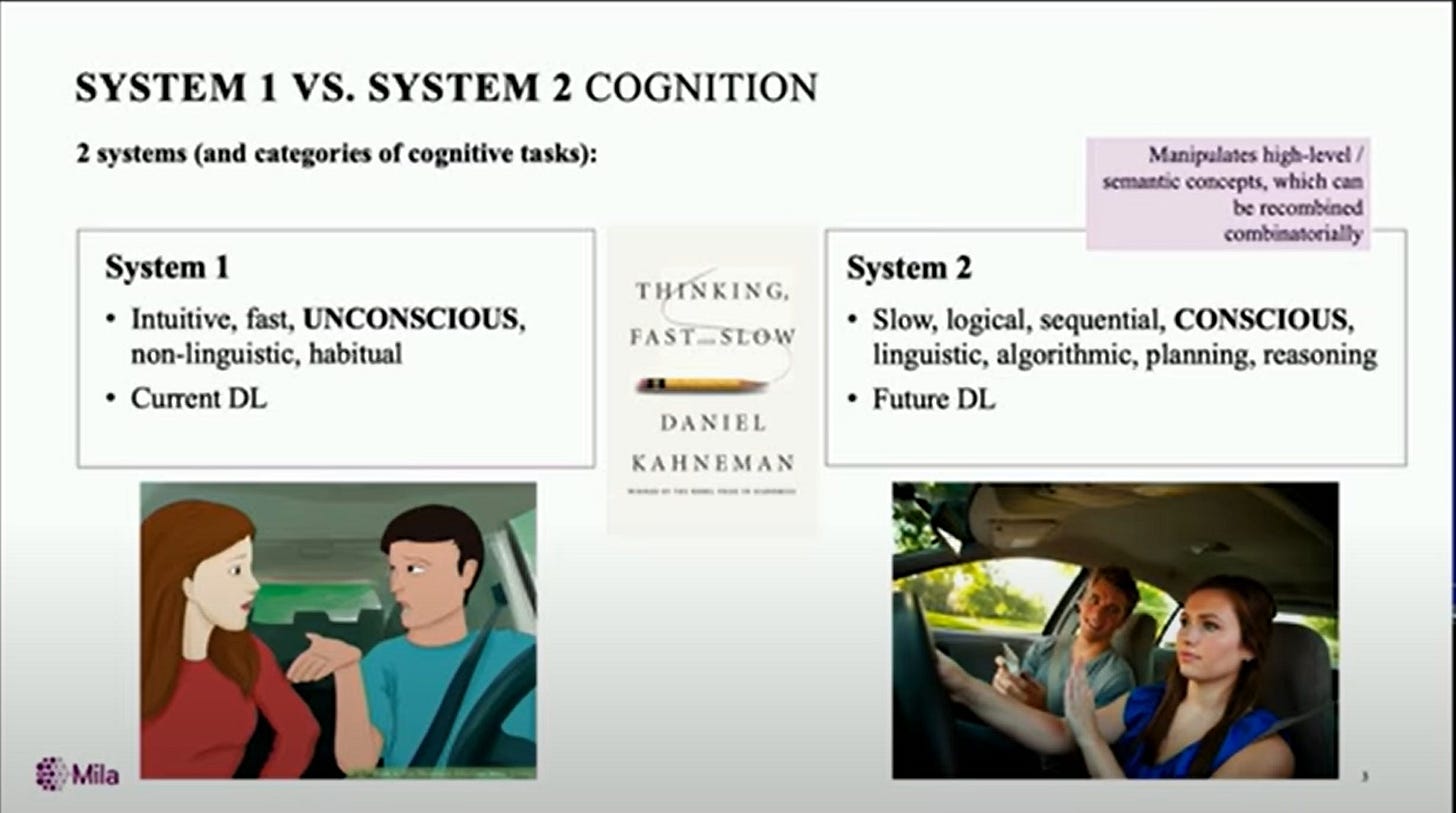

Popularized by Daniel Kahneman in his bestseller Thinking, Fast and Slow, “System 1 and System 2” is a model that describes two modes of human decision-making and reasoning.

System 1 thinking is quick, instinctive, and automatic, while System 2 involves more rational, slower decision-making that requires conscious effort.

Canadian computer scientist Yoshua Bengio explains this well in a 2019 talk.

When you drive home along a familiar route, the process becomes automatic and instinctive, typifying System 1 thinking. You don't need to pay much attention to the road; you can hold a conversation or do other things while driving. Current deep learning excels at tasks like this, which are habitual and can be performed intuitively.

Yoshua contrasts this with navigating a new city. In an unfamiliar setting, you need to pay close attention to directions, read road signs, and stay actively engaged in the task. If a passenger tries to start a conversation, the driver may ask them to hold off, which shows the need for conscious, deliberate thinking: the hallmark of System 2 tasks.

The analogy underscores the difference in depth and conscious effort between routine, automatic actions (System 1) and complex, deliberately processed tasks (System 2).

Andrej on Thinking, System 1/2

Large language models, including the widely known GPT-3, operate as if they are on a predetermined track: they generate text one token (a word or word fragment) at a time, and each token receives roughly the same amount of computation, no matter how hard the question is.

This limitation confines them to System 1 thinking and prevents them from simulating the nuanced, deliberate process associated with System 2.
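To make the "same amount of computation per token" point concrete, here is a toy sketch. `ToyModel` and its bigram table are hypothetical stand-ins for a real LLM; the only thing they share with one is the shape of the generation loop, in which each forward pass yields exactly one token.

```python
# Hypothetical toy "model": a fixed bigram table standing in for a trained LLM.
BIGRAMS = {
    "<s>": "the",
    "the": "answer",
    "answer": "is",
    "is": "42",
    "42": "</s>",
}


class ToyModel:
    """Stand-in for an LLM: one forward pass predicts exactly one next token."""

    def __init__(self) -> None:
        self.forward_passes = 0

    def forward(self, context: list[str]) -> str:
        # The same fixed amount of work per call, however "hard" the prompt is.
        self.forward_passes += 1
        return BIGRAMS.get(context[-1], "</s>")


def generate(model: ToyModel, max_tokens: int = 10) -> list[str]:
    """Autoregressive loop: append one predicted token per forward pass."""
    tokens = ["<s>"]
    for _ in range(max_tokens):
        next_token = model.forward(tokens)
        if next_token == "</s>":
            break
        tokens.append(next_token)
    return tokens[1:]  # drop the start marker


model = ToyModel()
print(generate(model), model.forward_passes)
```

Whether the question is trivial or difficult, this loop performs one forward pass per token. The only way it can spend more computation on a problem is to emit more tokens, which is one intuition behind prompting models to write out intermediate steps.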

Andrej notes that researchers and developers want to give these language models System 2 capabilities.

The vision is to let users pose a question or problem and give the model the luxury of time, say 30 minutes, instead of expecting an instant response.

This shift would enable the model to engage in a more deliberate and conscious thought process, akin to a human systematically exploring a problem, considering various angles, and arriving at a well-thought-out conclusion.
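The talk does not prescribe a specific mechanism for turning time into accuracy. One commonly discussed approach is to sample several independent attempts and take a majority vote (often called self-consistency). The sketch below illustrates that idea only; `noisy_solver` is a hypothetical stand-in for a single fast model answer, and its 70% accuracy and error pattern are made-up assumptions.

```python
import random
from collections import Counter


def noisy_solver(a: int, b: int, rng: random.Random, p_correct: float = 0.7) -> int:
    """Hypothetical stand-in for one fast, System 1-style answer to a * b.

    It is right with probability p_correct; otherwise it is off by a small amount.
    """
    if rng.random() < p_correct:
        return a * b
    return a * b + rng.choice([-10, -2, -1, 1, 2, 10])


def answer_with_budget(a: int, b: int, samples: int, seed: int = 0) -> int:
    """Spend more compute (more independent samples) and return the majority answer."""
    if samples < 1:
        raise ValueError("samples must be at least 1")
    rng = random.Random(seed)
    votes = Counter(noisy_solver(a, b, rng) for _ in range(samples))
    answer, _count = votes.most_common(1)[0]
    return answer


def accuracy(samples: int, trials: int = 200) -> float:
    """Fraction of trials (each with its own seed) that return the correct product."""
    hits = sum(answer_with_budget(17, 24, samples, seed=t) == 17 * 24 for t in range(trials))
    return hits / trials


for budget in (1, 5, 25):
    print(f"samples={budget:>2}  accuracy={accuracy(budget):.2f}")
```

Because the wrong answers are scattered while the right one repeats, a larger sampling budget makes the correct answer win the vote more often. Real systems replace the vote with richer strategies (search, verification, reflection), but the trade-off is the same: more computation for higher accuracy.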

Challenges in LLMs' System 2 Thinking Capabilities

As pattern-recognition systems, LLMs may not be naturally suited to System 2 tasks. They currently lack explicit internal reasoning mechanisms and the ability to reason independently.

Because they rely on fast, System 1-style pattern matching, they can also make errors in judgment and decision-making.

Yoshua Bengio emphasizes the need to improve out-of-distribution generalization and transfer learning. The challenge is to enable deep learning models to perform well on new tasks with minimal data, much as humans quickly adapt to unfamiliar situations or learn new concepts.


Potential solutions

I found this blog post in which the authors, some prominent minds in AI, discuss approaches to enhancing System 2 thinking capabilities in LLMs.

Some that I found interesting are...

Integration of Symbolic Approaches: Historically, cognitive architectures focused on symbolic approaches to knowledge, reasoning, planning, and constraint satisfaction. The challenge today is that these methods, while providing sound inference, don't align easily with language, which hinders their use by current large language models (LLMs). The proposed solution is to integrate symbolic approaches with neural architectures.

Neural-Symbolic Integration: A growing body of research is exploring the integration of neural and symbolic architectures. This involves adding mathematical and physical reasoning to language, as exemplified by Stephen Wolfram's work. Calls for integrating knowledge graphs with language models, such as those by Denny Vrandečić, and incorporating explicit knowledge and rules of thumb, as suggested by Doug Lenat, indicate a shift toward more sophisticated, hybrid approaches.
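As a rough illustration of "adding mathematical reasoning to language", here is a sketch of the division of labor: a language model would write an arithmetic expression, and a small symbolic engine evaluates it exactly with rational numbers instead of letting the model guess digits. This is not Wolfram's API or method; `evaluate_exactly` is a hypothetical helper built only on Python's standard `ast` and `fractions` modules, and it deliberately supports just `+`, `-`, `*`, `/` and unary minus.

```python
import ast
import operator
from fractions import Fraction

# Binary operators the toy symbolic engine supports.
_OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
}


def evaluate_exactly(expression: str) -> Fraction:
    """Parse an arithmetic expression and evaluate it exactly as a Fraction.

    Anything outside integer literals, + - * /, unary minus and parentheses
    is rejected, so arbitrary code cannot run.
    """

    def _eval(node: ast.AST) -> Fraction:
        if isinstance(node, ast.Expression):
            return _eval(node.body)
        if (
            isinstance(node, ast.Constant)
            and isinstance(node.value, int)
            and not isinstance(node.value, bool)
        ):
            return Fraction(node.value)
        if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](_eval(node.left), _eval(node.right))
        if isinstance(node, ast.UnaryOp) and isinstance(node.op, ast.USub):
            return -_eval(node.operand)
        raise ValueError(f"unsupported syntax: {ast.dump(node)}")

    return _eval(ast.parse(expression, mode="eval"))


# In a hybrid system, the expression string would come from the language model.
print(evaluate_exactly("(1234 * 5678) / 2"))
print(evaluate_exactly("1/3 + 1/3 + 1/3"))
```

The neural side handles the fuzzy part (turning language into a formal expression), while the symbolic side guarantees the result, including exact fractions where floating point would round.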

Knowledge Representation Enhancement: Researchers propose enhancing language models by integrating knowledge graphs and more sophisticated knowledge representation schemes. Tom Dietterich advocates for a research arc starting from integrating knowledge graphs into language models, recognizing that a purely System 1 approach is insufficient for advanced competencies.
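One simple way to picture "integrating knowledge graphs into language models" is to consult a store of explicit facts first and fall back to the model's free-form answer only when no fact is found. The sketch below assumes exactly that routing; the two triples, `lm_guess`, and the source labels are hypothetical, and real systems combine knowledge graphs with LLMs in many other ways (retrieval, fine-tuning, tool calls).

```python
from typing import Optional

# Hypothetical knowledge graph stored as (subject, relation) -> object.
KNOWLEDGE_GRAPH: dict[tuple[str, str], str] = {
    ("Thinking, Fast and Slow", "author"): "Daniel Kahneman",
    ("AlphaGo", "developer"): "DeepMind",
}


def kg_lookup(subject: str, relation: str) -> Optional[str]:
    """Symbolic step: an exact, verifiable lookup (None if the fact is absent)."""
    return KNOWLEDGE_GRAPH.get((subject, relation))


def lm_guess(subject: str, relation: str) -> str:
    """Hypothetical stand-in for a free-form LLM answer, which may be wrong."""
    return f"<model's best guess for the {relation} of {subject}>"


def answer(subject: str, relation: str) -> tuple[str, str]:
    """Prefer the knowledge graph; fall back to the language model.

    Returns the answer together with where it came from.
    """
    fact = kg_lookup(subject, relation)
    if fact is not None:
        return fact, "knowledge_graph"
    return lm_guess(subject, relation), "language_model"


print(answer("Thinking, Fast and Slow", "author"))
print(answer("AlphaGo", "release_year"))
```

Returning the source alongside the answer makes it easy to tell a grounded fact from a pattern-matched guess.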

Hybrid Architectures: Examples from various domains, including AlphaGo and self-driving cars, showcase successful implementations of hybrid architectures. AlphaGo, for instance, employs a combination of deep policy networks and tree search. In self-driving cars, symbolic representations of traffic laws are crucial for precision. The common thread is that hybrid architectures, combining symbolic representations with neural networks, are more effective for building System 2 competencies.
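AlphaGo's policy networks plus tree search are too large for a short example, so here is a sketch of the self-driving case instead, using a "neural proposes, symbolic enforces" pattern. Everything in it is hypothetical: `learned_policy` is a stand-in for a neural network (it simply overshoots the limit by 10%), and the traffic rules are drastically simplified, including the assumed 50 m stopping threshold.

```python
from dataclasses import dataclass


@dataclass
class Scene:
    speed_limit_kmh: float
    light: str  # "green", "yellow" or "red"
    distance_to_stop_line_m: float


def learned_policy(scene: Scene) -> float:
    """Hypothetical stand-in for a neural network proposing a target speed (km/h).

    Fluent but imprecise: here it simply overshoots the speed limit by 10%.
    """
    return scene.speed_limit_kmh * 1.1


def apply_traffic_rules(scene: Scene, proposed_kmh: float) -> float:
    """Symbolic layer: explicit rules the final action must always satisfy."""
    target = min(proposed_kmh, scene.speed_limit_kmh)  # never exceed the limit
    if scene.light == "red" and scene.distance_to_stop_line_m < 50:
        target = 0.0  # stop for a nearby red light (threshold is an assumption)
    return max(target, 0.0)


def hybrid_controller(scene: Scene) -> float:
    """Let the learned policy propose, then let the rules have the final say."""
    return apply_traffic_rules(scene, learned_policy(scene))


print(hybrid_controller(Scene(speed_limit_kmh=50, light="green", distance_to_stop_line_m=120)))
print(hybrid_controller(Scene(speed_limit_kmh=50, light="red", distance_to_stop_line_m=30)))
```

The learned component handles what is hard to write down, while the precise, non-negotiable constraints live in explicit code that can be inspected and tested.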

Conclusion

While System 2 capability is not yet realized in existing language models, it represents an exciting frontier in AI research. Combining the efficiency of System 1 thinking with the depth of System 2 thinking could revolutionize how we interact with AI, allowing for more thoughtful and nuanced conversations with these intelligent systems.