The Most In-Demand Hardware in The World; Introduction to Database Storage Engines

Read Time: 4 mins 55 secs (Know that your time is invaluable to me!)

Hey friend!

Today we’ll be talking about:

The Most In-Demand Hardware in The World!

The Context

What is the H100?

What Makes H100 So Special?

Database Series: DB Engines and Tradeoffs

The Choice of The Right Database

The Most In-Demand Hardware in The World!

The Context

Ancient civilisations hunted for spice; in the 20th century we fought wars for oil. In 2023, the world’s most precious commodity is an envelope-sized computer chip.

I couldn’t have come up with a better intro than The Telegraph. It perfectly sums up the current scenario.

Despite a price tag of $40,000, even super-rich countries and companies are struggling to get their hands on it.

Demand for the H100 is so great that some customers are having to wait as long as six months to receive it.

I had thought AI was already evolving at breakneck speed over the last 8 months. Apparently, it could have been even faster.

So, what is this H100?

What is the H100?

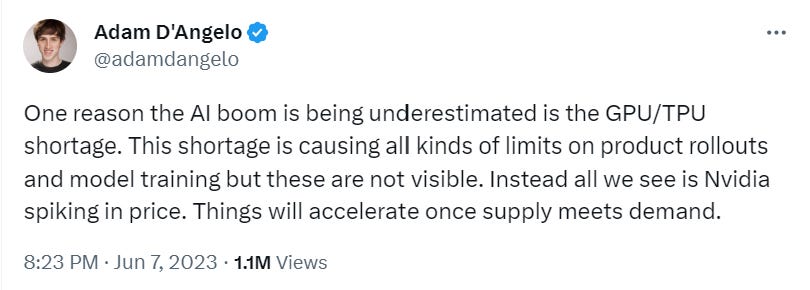

The NVIDIA H100 GPU is a hardware accelerator designed for data centers and AI-focused applications. Its Hopper architecture is named after pioneering computer scientist Grace Hopper.

A GPU is a type of chip that normally lives in PCs and helps gamers get the most realistic visual experience. Unlike a regular consumer GPU, the H100 is built for data processing, such as training and running AI models in data centers.

Here are some key details about the H100 GPU:

Tensor Cores: The H100 features fourth-generation Tensor Cores, which are specialized hardware units for accelerating AI computations.

Transformer Engine: The H100 GPU includes a Transformer Engine with FP8 precision, which enables up to 4 times faster training compared to the previous generation.

Architecture: The H100 is based on the NVIDIA Hopper architecture, the successor to the Ampere architecture used in the A100. NVIDIA's DGX H100 server, built on eight H100 GPUs, is the fourth generation of its AI-focused DGX systems.

Form Factors: The H100 GPU is available in PCIe and SXM form factors.

Multi-Instance GPU: The H100 supports GPU virtualization through Multi-Instance GPU (MIG) and can be partitioned into up to seven isolated instances. NVIDIA bills it as the first multi-instance GPU with native support for Confidential Computing.

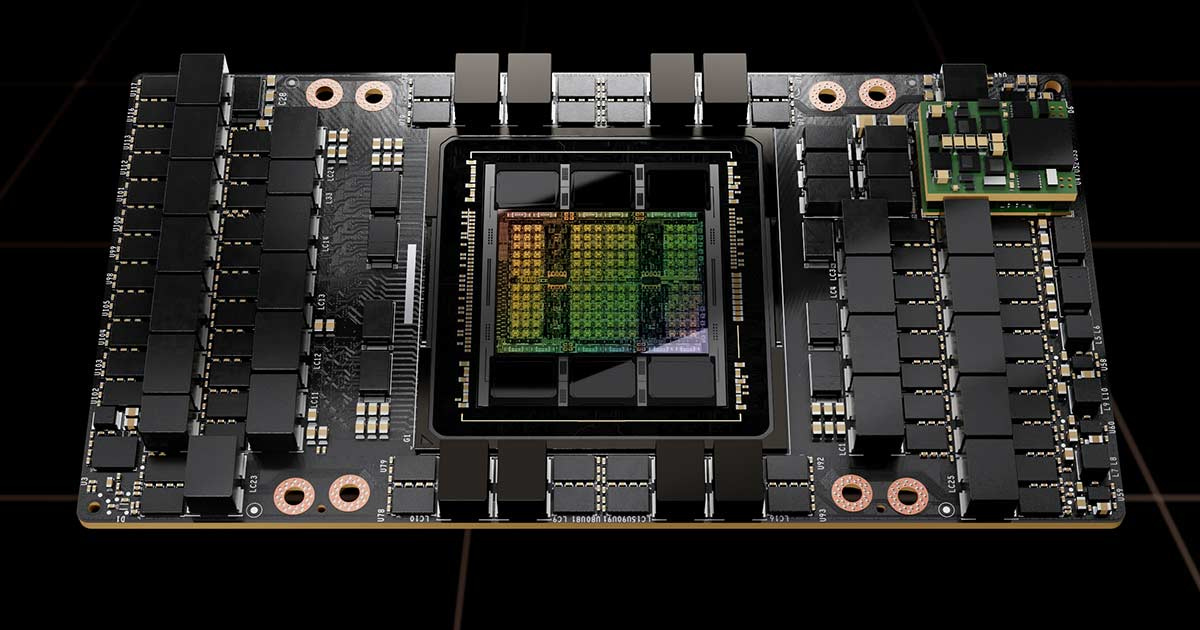

Performance: According to NVIDIA, the H100 is up to nine times faster for AI training and up to 30 times faster for inference than the previous-generation A100 GPU.

What Makes H100 So Special?

Powerful Performance

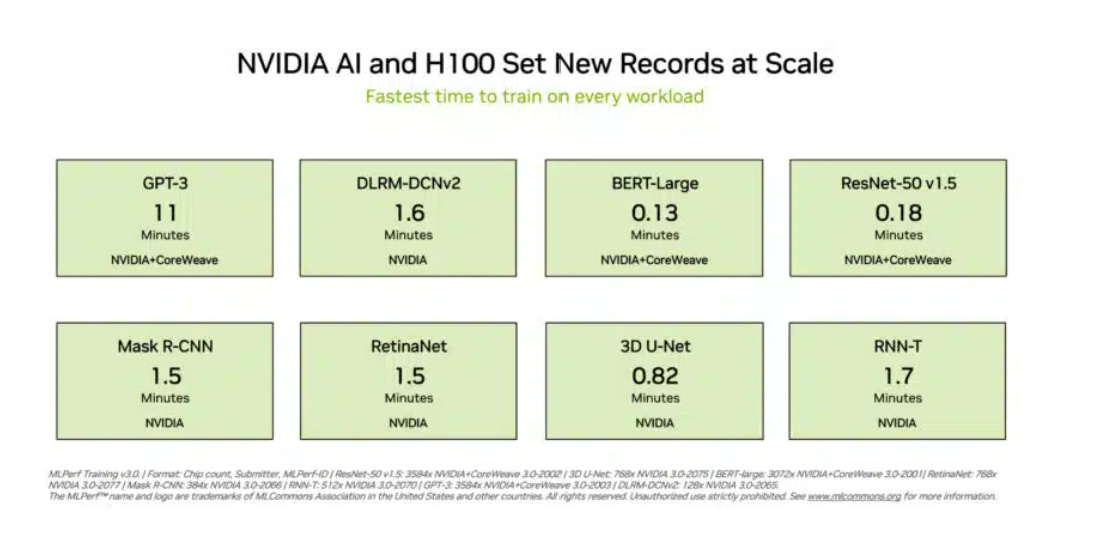

The H100 promises significant performance improvements compared to its predecessors. It can deliver up to 9 times faster AI training and up to 30 times faster AI inference in popular machine-learning applications.

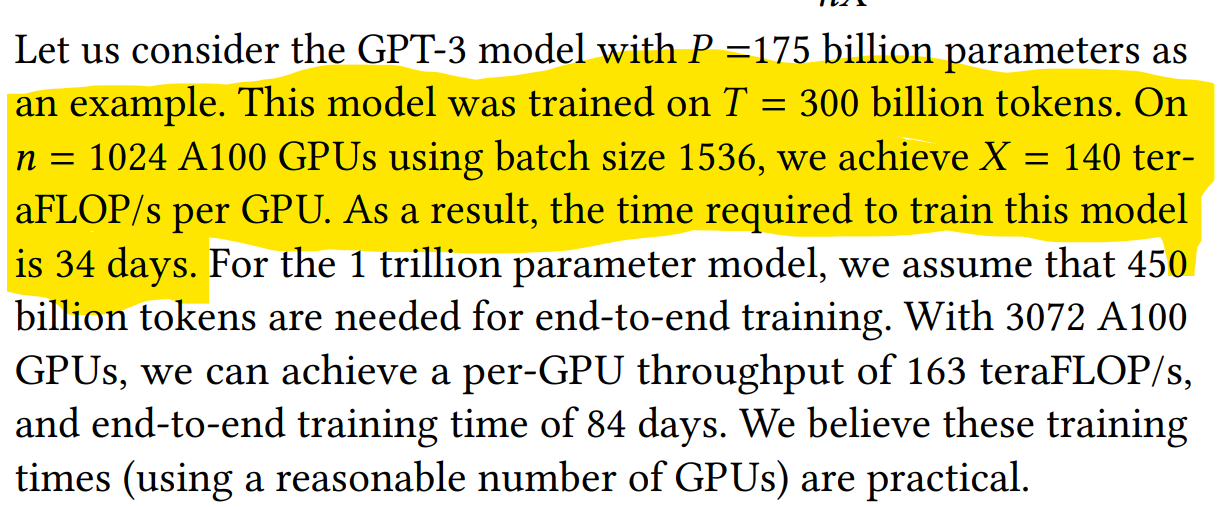

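To put things in perspective, training GPT-3 on 1,024 A100 GPUs (the H100's predecessor) has been estimated to take about 34 days. In the MLPerf Training v3.0 round, a cluster of 3,584 H100 GPUs built by NVIDIA and CoreWeave completed the GPT-3 benchmark in about 11 minutes. That benchmark covers only a representative slice of the full GPT-3 training run, so the two figures are not directly comparable, but the jump is still dramatic.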

Ideal for AI and HPC

The H100 GPU is particularly well-suited for complex AI models and high-performance computing applications. Its Hopper architecture and fourth-generation Tensor Cores make it one of the most powerful GPUs available.

Best Use Cases (Reference: Lambda Labs)

Big models with high structured sparsity:

The H100 performs especially well on big models with structured sparsity, particularly large language models. Vision models benefit less from the upgrade.

Transformers, commonly used in NLP, are becoming popular in other areas such as computer vision and drug discovery, and they benefit directly from H100 features like FP8 precision and the Transformer Engine.
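Lambda's note doesn't define "structured sparsity". On NVIDIA's Ampere and Hopper Tensor Cores it usually means the 2:4 pattern: in every group of four consecutive weights, at most two are non-zero, so the hardware can skip the zeros. Here is a minimal magnitude-pruning sketch in plain Python that enforces that pattern on a flat list of weights. The function name `prune_2_4` is hypothetical and this is not NVIDIA's tooling; in practice the model is usually fine-tuned after pruning to recover accuracy.

```python
from typing import List, Sequence


def prune_2_4(weights: Sequence[float]) -> List[float]:
    """Return a copy of `weights` pruned to the 2:4 structured-sparsity pattern.

    In each consecutive group of 4 weights, the 2 with the largest absolute
    value are kept and the other 2 are set to zero.
    """
    if len(weights) % 4 != 0:
        raise ValueError("length must be a multiple of 4 for 2:4 sparsity")

    pruned: List[float] = []
    for start in range(0, len(weights), 4):
        group = weights[start:start + 4]
        # Indices of the two largest-magnitude weights in this group.
        keep = sorted(range(4), key=lambda j: abs(group[j]), reverse=True)[:2]
        pruned.extend(w if j in keep else 0.0 for j, w in enumerate(group))
    return pruned


if __name__ == "__main__":
    dense = [0.1, -0.9, 0.3, 0.05, 0.7, -0.2, 0.0, 0.4]
    print(prune_2_4(dense))  # [0.0, -0.9, 0.3, 0.0, 0.7, 0.0, 0.0, 0.4]
```

Half the weights become zero, but always in a fixed, predictable layout, which is what makes the sparsity "structured" and hardware-friendly.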

Large-scale distributed data parallelization:

H100's new NVLink and NVSwitch tech make communication 4.5x faster in setups with many GPUs across nodes.

This helps most in large-scale distributed training, where communication between GPUs has been a bottleneck.

It's useful for various models including language and text2image models, as well as big CNN models.
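Most of that GPU-to-GPU traffic is gradient synchronization. In data-parallel training, each GPU computes gradients on its own slice of the batch, then all GPUs sum (all-reduce) those gradients so every copy of the model takes the same update step. The sketch below simulates ring all-reduce, one well-known algorithm for that step (NCCL, NVIDIA's communication library, implements ring and tree variants), in a single Python process, with lists standing in for GPU buffers. `ring_allreduce` is a hypothetical helper, not a library API. The element counter shows why interconnect bandwidth matters: the ring moves 2(N-1) times the buffer size in total, however fast each GPU computes.

```python
from typing import List, Sequence, Tuple


def ring_allreduce(buffers: Sequence[Sequence[float]]) -> Tuple[List[List[float]], int]:
    """Simulate ring all-reduce (sum) across len(buffers) workers.

    Returns the reduced buffer held by every worker and the total number of
    elements sent over the ring.
    """
    n = len(buffers)
    length = len(buffers[0])
    # Split each buffer into n contiguous chunks; chunk k covers bounds[k].
    bounds = [(k * length // n, (k + 1) * length // n) for k in range(n)]
    data = [list(b) for b in buffers]
    sent = 0

    # Phase 1, reduce-scatter: after n-1 steps, worker i owns the fully
    # summed chunk (i + 1) % n.
    for step in range(n - 1):
        # Build every message first so all sends in a step happen "at once".
        messages = []
        for i in range(n):
            k = (i - step) % n
            lo, hi = bounds[k]
            messages.append(((i + 1) % n, k, data[i][lo:hi]))
        for dst, k, payload in messages:
            lo = bounds[k][0]
            for offset, value in enumerate(payload):
                data[dst][lo + offset] += value
            sent += len(payload)

    # Phase 2, all-gather: pass the finished chunks around the ring.
    for step in range(n - 1):
        messages = []
        for i in range(n):
            k = (i + 1 - step) % n
            lo, hi = bounds[k]
            messages.append(((i + 1) % n, k, data[i][lo:hi]))
        for dst, k, payload in messages:
            lo, hi = bounds[k]
            data[dst][lo:hi] = payload
            sent += len(payload)

    return data, sent


if __name__ == "__main__":
    # Four hypothetical workers, each holding an 8-element gradient.
    grads = [[float(w + 1)] * 8 for w in range(4)]
    reduced, sent = ring_allreduce(grads)
    averaged = [[g / len(grads) for g in buf] for buf in reduced]
    print(averaged[0])  # every worker ends up with the same averaged gradient
    print(sent)  # 48 elements = 2 * (4 - 1) * 8
```

Each worker only ever talks to its neighbour and sends about 2(N-1)/N of its buffer, which is why the ring scales well, but also why faster links translate directly into faster training steps.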

Model parallelization:

Some complex models don't fit on one GPU, so they need to be split across multiple GPUs or nodes.

H100's NVSwitch boosts performance greatly. For example, Megatron-Turing NLG inference runs up to 30x faster on H100 than on A100 with the same number of GPUs.
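Model parallelism comes in several forms. One common form, tensor parallelism as popularized by NVIDIA's Megatron-LM, splits a single layer's weight matrix across GPUs. The sketch below simulates a column-parallel linear layer in plain Python: each "device" holds a slice of the weight columns, computes its part of the output, and the slices are concatenated, which on real hardware is an all-gather over the interconnect that NVSwitch accelerates. The helper names are hypothetical and the lists stand in for GPU memory.

```python
from typing import List

Matrix = List[List[float]]


def matmul(x: Matrix, w: Matrix) -> Matrix:
    """Plain matrix product: (m x k) @ (k x n) -> (m x n)."""
    k, n = len(w), len(w[0])
    return [[sum(row[t] * w[t][j] for t in range(k)) for j in range(n)] for row in x]


def split_columns(w: Matrix, parts: int) -> List[Matrix]:
    """Split w column-wise into `parts` contiguous shards, one per device."""
    n = len(w[0])
    bounds = [(p * n // parts, (p + 1) * n // parts) for p in range(parts)]
    return [[row[lo:hi] for row in w] for lo, hi in bounds]


def column_parallel_linear(x: Matrix, w: Matrix, devices: int) -> Matrix:
    """Simulate a column-parallel layer: each device multiplies x by its shard."""
    shards = split_columns(w, devices)
    partial_outputs = [matmul(x, shard) for shard in shards]  # one per device
    # Gather step: concatenate each device's output columns, row by row.
    return [
        [value for part in partial_outputs for value in part[i]]
        for i in range(len(x))
    ]


if __name__ == "__main__":
    x = [[1.0, 2.0], [3.0, 4.0]]                       # 2 tokens, hidden size 2
    w = [[1.0, 0.0, 2.0, -1.0], [0.5, 1.0, 0.0, 3.0]]  # 2 x 4 weight matrix
    print(column_parallel_linear(x, w, devices=2))
    print(matmul(x, w))  # same result, computed on a single "device"
```

No single device ever needs the full weight matrix, which is the whole point when a model is too large for one GPU; the price is the gather traffic after every such layer.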

Model quantization:

Making trained models work well at lower precision (such as INT8) is important for real-world deployment.

Converting models to INT8 can lead to accuracy loss.

H100 introduces FP8, a new data type that helps maintain accuracy while using less precision, making model quantization easier and more effective.
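To make the INT8-versus-FP8 point concrete, the sketch below quantizes the same values both ways and converts them back. It assumes simple per-tensor scaling (the largest magnitude maps to the top of each format's range) and the E4M3 variant of FP8 (4 exponent bits, 3 mantissa bits, largest finite value 448), one of the two FP8 formats Hopper supports. INT8 spaces its levels evenly, so small values sitting next to a large one collapse to zero; FP8 spaces its levels roughly logarithmically, so the relative error stays at most 1/16 (6.25%) for values in its normal range. The function names are hypothetical, NaN handling is ignored, and ties round toward the lower value rather than to even, so treat this as an illustration rather than a bit-exact FP8 implementation.

```python
from typing import List, Sequence


def int8_roundtrip(values: Sequence[float]) -> List[float]:
    """Symmetric per-tensor INT8 quantization followed by dequantization."""
    amax = max(abs(v) for v in values)
    if amax == 0:
        return [0.0 for _ in values]
    scale = amax / 127  # largest magnitude maps to +/-127
    return [max(-127, min(127, round(v / scale))) * scale for v in values]


def e4m3_grid() -> List[float]:
    """All finite FP8 E4M3 values (exponent bias 7, max 448; NaN code skipped)."""
    values = set()
    for exponent in range(16):
        for mantissa in range(8):
            if exponent == 15 and mantissa == 7:
                continue  # S.1111.111 encodes NaN in E4M3
            if exponent == 0:  # subnormals
                magnitude = (mantissa / 8) * 2.0 ** -6
            else:
                magnitude = (1 + mantissa / 8) * 2.0 ** (exponent - 7)
            values.update((magnitude, -magnitude))
    return sorted(values)


E4M3 = e4m3_grid()


def fp8_roundtrip(values: Sequence[float]) -> List[float]:
    """Per-tensor FP8 (E4M3) quantization with round-to-nearest, then back."""
    amax = max(abs(v) for v in values)
    if amax == 0:
        return [0.0 for _ in values]
    scale = amax / 448  # largest magnitude maps to E4M3's max finite value
    # Nearest representable value; out-of-range inputs saturate at +/-448.
    return [min(E4M3, key=lambda q: abs(q - v / scale)) * scale for v in values]


if __name__ == "__main__":
    data = [0.01, 0.1, 1.0, 10.0, 100.0]  # wide dynamic range, one large value
    for name, fn in (("INT8", int8_roundtrip), ("FP8 E4M3", fp8_roundtrip)):
        approx = fn(data)
        errors = [abs(a - b) / a for a, b in zip(data, approx)]
        print(name, [f"{e:.1%}" for e in errors])
```

Both formats use 8 bits per value; the difference is purely in where the representable numbers sit, which is why FP8 tends to cope better with the wide value ranges found in neural-network activations and gradients.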

Database Storage Engines - Part 1

Databases are modular systems and consist of multiple parts:

A transport layer that accepts requests

A query processor to determine the most efficient way to run queries

An execution engine carrying out the operations

A storage engine
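You can watch a query processor pick an execution strategy. The sketch below uses SQLite through Python's built-in `sqlite3` module, chosen only because it ships with Python; the `users` table and `idx_users_email` index are hypothetical. The same query is planned twice, before and after an index exists, and `EXPLAIN QUERY PLAN` shows the planner switching from a full table scan to an index search. The exact wording of the plan text varies between SQLite versions.

```python
import sqlite3
from typing import List


def query_plan(conn: sqlite3.Connection, sql: str) -> List[str]:
    """Return the 'detail' column (last column) of EXPLAIN QUERY PLAN rows."""
    return [row[-1] for row in conn.execute("EXPLAIN QUERY PLAN " + sql)]


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, email TEXT, name TEXT)")
    conn.executemany(
        "INSERT INTO users (email, name) VALUES (?, ?)",
        [(f"user{i}@example.com", f"User {i}") for i in range(1000)],
    )
    query = "SELECT name FROM users WHERE email = 'user42@example.com'"

    print("before index:", query_plan(conn, query))  # a full SCAN of users
    conn.execute("CREATE INDEX idx_users_email ON users (email)")
    print("after index: ", query_plan(conn, query))  # a SEARCH using the index
    conn.close()
```

The query text never changes; only the query processor's plan does, which is exactly the separation of concerns the layered design is meant to give you.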

A storage engine is the software component in a DBMS architecture that is responsible for storing, retrieving, and managing data in memory or on disk.

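MySQL supports several pluggable storage engines, including InnoDB (the default) and MyISAM. MySQL-compatible distributions such as Percona Server and MariaDB also ship MyRocks, an engine built on RocksDB.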
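What makes engines swappable is that they sit behind a narrow interface. The sketch below assumes a deliberately tiny, hypothetical key-value interface (`put`, `get`, `delete`) and two hypothetical engines: one keeps everything in a dictionary in memory, the other appends every write to a log file and replays that log on startup to rebuild its in-memory copy. Neither resembles InnoDB's or MyISAM's internals; the point is only that the code above the engine (here `run_queries`, a stand-in for the execution engine) stays the same when the engine changes.

```python
import json
import os
import tempfile
from typing import Dict, Optional, Protocol


class StorageEngine(Protocol):
    """The narrow interface the execution engine calls (hypothetical)."""

    def put(self, key: str, value: str) -> None: ...
    def get(self, key: str) -> Optional[str]: ...
    def delete(self, key: str) -> None: ...


class MemoryEngine:
    """Keeps all data in a dict: fast, but lost when the process exits."""

    def __init__(self) -> None:
        self._data: Dict[str, str] = {}

    def put(self, key: str, value: str) -> None:
        self._data[key] = value

    def get(self, key: str) -> Optional[str]:
        return self._data.get(key)

    def delete(self, key: str) -> None:
        self._data.pop(key, None)


class AppendLogEngine:
    """Appends every write to a file and replays the file to rebuild state."""

    def __init__(self, path: str) -> None:
        self._path = path
        self._data: Dict[str, str] = {}
        if os.path.exists(path):
            with open(path, encoding="utf-8") as log:
                for line in log:
                    record = json.loads(line)
                    if record["value"] is None:  # tombstone: key was deleted
                        self._data.pop(record["key"], None)
                    else:
                        self._data[record["key"]] = record["value"]

    def _append(self, key: str, value: Optional[str]) -> None:
        with open(self._path, "a", encoding="utf-8") as log:
            log.write(json.dumps({"key": key, "value": value}) + "\n")

    def put(self, key: str, value: str) -> None:
        self._append(key, value)
        self._data[key] = value

    def get(self, key: str) -> Optional[str]:
        return self._data.get(key)

    def delete(self, key: str) -> None:
        self._append(key, None)
        self._data.pop(key, None)


def run_queries(engine: StorageEngine) -> Optional[str]:
    """Stand-in for the execution engine: same code for any storage engine."""
    engine.put("user:1", "Ada")
    engine.put("user:2", "Grace")
    engine.delete("user:1")
    return engine.get("user:2")


if __name__ == "__main__":
    log_path = os.path.join(tempfile.mkdtemp(), "data.log")
    for engine in (MemoryEngine(), AppendLogEngine(log_path)):
        print(type(engine).__name__, run_queries(engine))
    # The log-based engine survives a "restart" by replaying its file.
    print(AppendLogEngine(log_path).get("user:2"))
```

The two engines make different tradeoffs (speed versus durability) behind an identical interface, which is the same kind of choice you make when picking a MySQL engine per table.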

The Choice of The Right Database

When designing systems, the choice of a database is the single most important decision you will make.

So it pays to invest time early in the development cycle to evaluate candidates and build confidence before committing to a specific database.

The best way to do this is to simulate the operations specific to your use case on several candidate databases, using test data. This is also the best way to find out how active each community is when you run into an issue.

Do not rely on industry benchmarks: they can introduce bias through test conditions you might never encounter. Simulate the conditions of your own use case instead.
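A use-case simulation doesn't have to be elaborate to be useful. The sketch below assumes a simple key-value workload and shows the skeleton: generate a reproducible mix of reads and writes from your own read-to-write ratio, replay the identical operation list against each candidate through a thin adapter, and time it. It uses SQLite from Python's standard library only because it needs no setup; `Adapter`, `SQLiteAdapter`, `make_workload`, and the `kv` table are hypothetical. For a real evaluation you would write one adapter per candidate database, load realistic data, and run many concurrent clients.

```python
import random
import sqlite3
import time
from typing import List, Optional, Protocol, Tuple

# One operation: ("read" or "write", key, value-to-write or None).
Op = Tuple[str, int, Optional[str]]


class Adapter(Protocol):
    """What each candidate database must provide to run the workload."""

    def read(self, key: int) -> Optional[str]: ...
    def write(self, key: int, value: str) -> None: ...
    def flush(self) -> None: ...


def make_workload(n_ops: int, read_ratio: float, n_keys: int, seed: int = 42) -> List[Op]:
    """Build a reproducible operation list with roughly `read_ratio` reads."""
    rng = random.Random(seed)  # fixed seed: every database sees the same ops
    ops: List[Op] = []
    for _ in range(n_ops):
        key = rng.randrange(n_keys)
        if rng.random() < read_ratio:
            ops.append(("read", key, None))
        else:
            ops.append(("write", key, f"value-{rng.randrange(10**6)}"))
    return ops


class SQLiteAdapter:
    """Example adapter for an in-memory SQLite key-value table."""

    def __init__(self) -> None:
        self.conn = sqlite3.connect(":memory:")
        self.conn.execute("CREATE TABLE kv (k INTEGER PRIMARY KEY, v TEXT)")

    def read(self, key: int) -> Optional[str]:
        row = self.conn.execute("SELECT v FROM kv WHERE k = ?", (key,)).fetchone()
        return row[0] if row else None

    def write(self, key: int, value: str) -> None:
        self.conn.execute("INSERT OR REPLACE INTO kv (k, v) VALUES (?, ?)", (key, value))

    def flush(self) -> None:
        self.conn.commit()


def run(adapter: Adapter, ops: List[Op]) -> float:
    """Replay the operations against one database; return elapsed seconds."""
    start = time.perf_counter()
    for kind, key, value in ops:
        if kind == "read":
            adapter.read(key)
        elif value is not None:
            adapter.write(key, value)
    adapter.flush()
    return time.perf_counter() - start


if __name__ == "__main__":
    # Hypothetical profile: 90% reads over 1,000 keys.
    ops = make_workload(n_ops=20_000, read_ratio=0.9, n_keys=1_000)
    reads = sum(1 for kind, _, _ in ops if kind == "read")
    elapsed = run(SQLiteAdapter(), ops)
    print(f"SQLite: {reads} reads, {len(ops) - reads} writes in {elapsed:.3f}s")
```

Because the workload is generated once from a fixed seed, every candidate is measured on exactly the same operations, so differences in timing come from the databases rather than from the test.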

Understand your use case in great detail to identify...

Schema

Potential number of clients

Possible database size

Read-to-Write ratio

With these inputs, you will know how easy or difficult your data will be to manage.
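These inputs already support some back-of-the-envelope arithmetic. The sketch below turns them into rough storage and throughput figures. Every number in it (row size, growth, request rates) is a made-up placeholder to replace with your own estimates, `UseCaseProfile` is a hypothetical name, and the schema is reduced to a single average row size, which is a strong simplification.

```python
from dataclasses import dataclass


@dataclass
class UseCaseProfile:
    avg_row_bytes: int                # from the schema: average size of one row
    rows_now: int                     # current data volume
    new_rows_per_day: int             # expected growth
    clients: int                      # potential number of concurrent clients
    requests_per_client_per_s: float  # how busy each client is
    read_ratio: float                 # reads / (reads + writes), e.g. 0.9 for 9:1

    def size_after_days(self, days: int) -> int:
        """Raw data size in bytes (ignores indexes, replication, and overhead)."""
        return (self.rows_now + self.new_rows_per_day * days) * self.avg_row_bytes

    def reads_per_s(self) -> float:
        return self.clients * self.requests_per_client_per_s * self.read_ratio

    def writes_per_s(self) -> float:
        return self.clients * self.requests_per_client_per_s * (1 - self.read_ratio)


if __name__ == "__main__":
    # Placeholder numbers for a hypothetical app, not a recommendation.
    profile = UseCaseProfile(
        avg_row_bytes=500,
        rows_now=2_000_000,
        new_rows_per_day=50_000,
        clients=200,
        requests_per_client_per_s=5,
        read_ratio=0.9,
    )
    print(f"size in 1 year: {profile.size_after_days(365) / 1e9:.1f} GB")
    print(f"reads/s: {profile.reads_per_s():.0f}, writes/s: {profile.writes_per_s():.0f}")
```

Even rough figures like these tell you whether you are looking at a workload a single node can handle comfortably or one that will push you toward sharding, replication, or a write-optimized engine.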

To pick the right database for your use case, you should be ready to make tradeoffs.

A simple illustration to help you understand tradeoffs.