The Tools‑First Approach to Building Reliable AI Agents

Why deterministic tools, not just prompts, are the foundation for scalable GenAI systems

TL;DR

LLM agents become robust, scalable, and debuggable only when you build, test, and orchestrate deterministic tools first, before crafting prompts. Tools, not prompts, should encode business logic, connect to APIs, and manage data.

Start with tools, not tasks.

Context

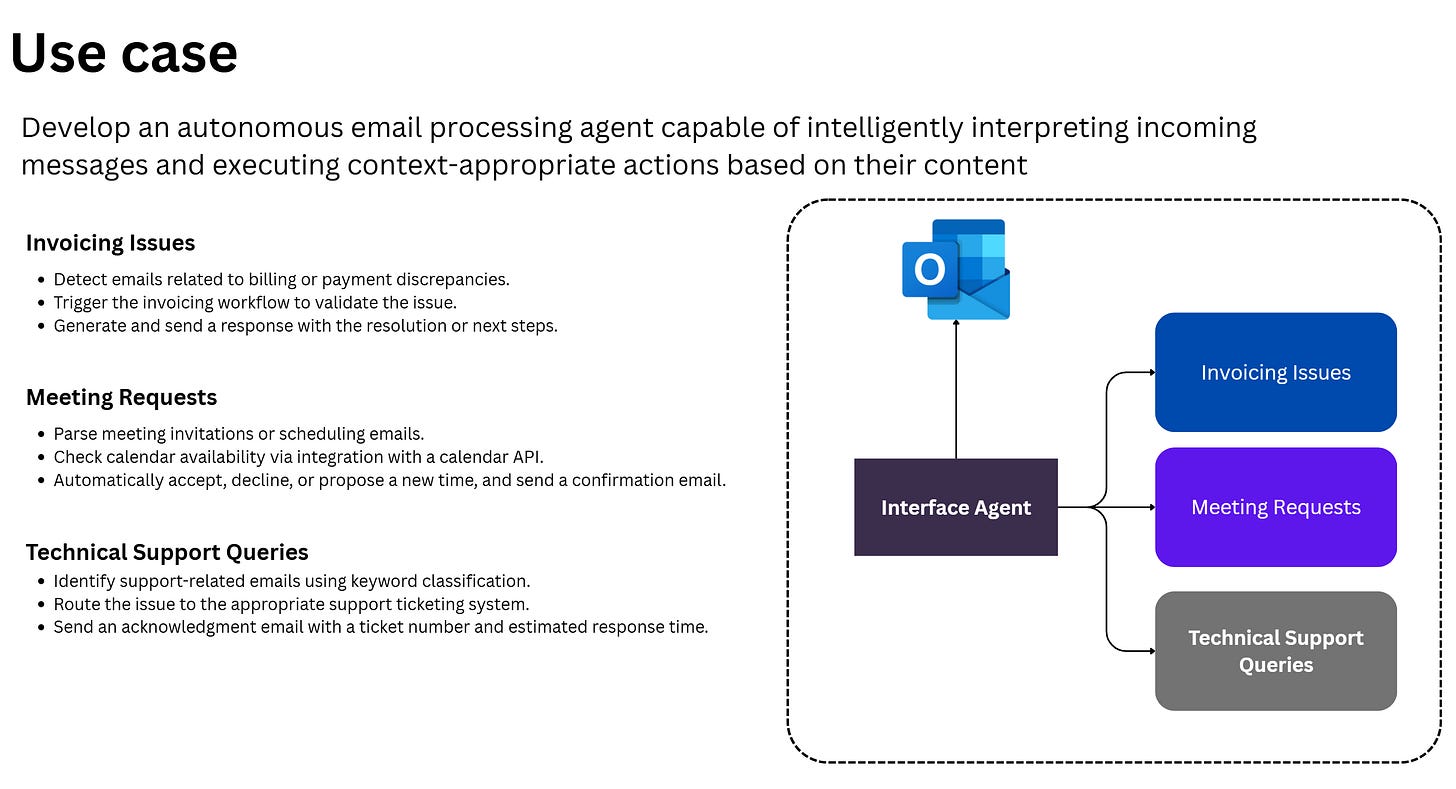

Let’s say you were given a task to build an Email Processing Agent that is capable of parsing incoming emails, understanding the intent, and executing the relevant tasks (like routing, calling downstream tools, and/or responding to the sender).

With agent frameworks like CrewAI or LangGraph, the common approach to use cases like this is to lay out the workflow first.

But should you start with flow methods, nodes, and edges, or should you start with the tool?

Most frameworks for building AI agents advise:

“Effective task definition is often more important than model selection.”

This is a great start, but I challenge this orthodoxy. In my experience, when deploying LLM agents for enterprise automation, this advice still puts you at risk of invisible failures and brittle systems.

My premise:

Don’t start with the task. Start with your tool design. Only then define the task and choose the model.

Why? If your tools, the deterministic, testable modules that wrap core business logic and external APIs, aren’t designed and hardened up front, both task definition and model selection become sources of confusion and fragility.

In the case of the Email Parser Agent, the following can happen:

Increased Risk of System-Wide Failures: Untested tools like the Email Classifier or Support Ticket API can cause cascading errors across workflows, disrupting tasks like invoicing or meeting scheduling.

Unreliable Tool Behavior: Unvalidated tools, such as the Calendar API, may mishandle edge cases (e.g., invalid time zones), leading to incorrect workflow outcomes.

Complex Debugging and Maintenance: Building workflows first obscures whether errors stem from tools, LLMs, or orchestration, complicating debugging in CrewAI or LangGraph.

Poor Handling of Edge Cases: Untested tools like the Email Classifier may fail on ambiguous inputs, breaking workflows like Technical Support Queries.

Over-Reliance on LLMs: Without tools like the Invite Parser, workflows rely on unpredictable LLMs for deterministic tasks, reducing reliability.

Scalability Challenges: Unoptimized tools (e.g., Support Ticket API) may fail under high load, causing bottlenecks in high-volume email processing.
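To make the "deterministic task" point concrete, here is a minimal sketch of what an Invite Parser tool could look like. The `Invite` type, the `parse_invite` function, and the simplified iCalendar input are assumptions made for illustration; they are not taken from the article's repository, and a real parser would need to handle far more of the format.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class Invite:
    summary: str
    start: datetime


class InviteParseError(ValueError):
    """Raised when the invite text lacks a required field or is malformed."""


def parse_invite(ics_text: str) -> Invite:
    """Extract SUMMARY and a UTC DTSTART from a simplified iCalendar body."""
    fields = {}
    for line in ics_text.splitlines():
        # Split on the first colon only, so values may contain colons.
        key, sep, value = line.strip().partition(":")
        if sep:
            fields[key.upper()] = value.strip()

    missing = [name for name in ("SUMMARY", "DTSTART") if name not in fields]
    if missing:
        raise InviteParseError(f"missing fields: {', '.join(missing)}")

    try:
        # Only the UTC form (trailing "Z") is handled in this sketch.
        start = datetime.strptime(fields["DTSTART"], "%Y%m%dT%H%M%SZ")
    except ValueError as exc:
        raise InviteParseError(f"bad DTSTART: {fields['DTSTART']!r}") from exc

    return Invite(summary=fields["SUMMARY"], start=start.replace(tzinfo=timezone.utc))
```

The same text always yields the same `Invite` or the same error, so this step can be unit-tested without involving a model at all.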

Prompts can impress, effective tasks may clarify, but only robust tools make agents truly reliable.

So:

Start by designing and hardening your tools. Make each unit deterministic, observable, and testable.

Then:

Define the agent’s task in terms of tool orchestration—not raw prompting. Only at this point does model selection add real value.

“Build your tools first. Then the task. Then the prompt. That’s where scalable, production-ready AI starts.”
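One way to read "define the task in terms of tool orchestration" is as a lookup from an intent label to a hardened tool. The sketch below assumes three hypothetical tools and intent names for the email agent; in practice the intent label would come from a classifier or an LLM, and the tool bodies would do real work.

```python
from typing import Callable, Dict


# Hypothetical tools: plain functions with deterministic behavior.
def route_to_billing(email_body: str) -> str:
    return "routed:billing"


def create_support_ticket(email_body: str) -> str:
    return "ticket:created"


def schedule_meeting(email_body: str) -> str:
    return "meeting:scheduled"


# The task is expressed as a mapping from intent to tool, not as a prompt.
TOOLS: Dict[str, Callable[[str], str]] = {
    "invoice": route_to_billing,
    "technical_support": create_support_ticket,
    "meeting_request": schedule_meeting,
}


def run_task(intent: str, email_body: str) -> str:
    """Dispatch an intent label (e.g. produced by an LLM classifier) to a tool."""
    tool = TOOLS.get(intent)
    if tool is None:
        # Unknown intents fail loudly instead of being improvised by the model.
        raise KeyError(f"no tool registered for intent {intent!r}")
    return tool(email_body)
```

With this shape, the model only chooses among known tools; everything that happens after the choice is ordinary, testable code.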

This guide provides you with a practical blueprint:

Why “tools-first”? How does it differ from standard “task-first” or “prompt-first” wisdom?

What, exactly, is a tool—and why is it the most controllable, reliable artifact in your stack?

How do you design, validate, and orchestrate tools for enterprise-ready agents?

Let’s Start with Why! Why a Tools-First Approach?

Tools are the deterministic components of an AI agent’s architecture.

Unlike LLMs, which generate responses based on probabilities, tools are explicitly coded to perform specific tasks, such as fetching data from APIs, performing calculations, or interacting with databases.

By prioritizing tool development, you create a solid foundation for your AI agent, ensuring that the most predictable parts of your system are reliable before introducing the variability of LLMs.

Benefits of the Tools-First Approach

Deterministic Behavior: Tools execute predefined logic, making their behavior predictable and testable.

Modularity: Well-designed tools are reusable across multiple agents or workflows.

Debugging Simplicity: Isolating tools from LLMs allows you to test and debug them independently, reducing complexity.

Reliability: Thoroughly tested tools minimize runtime errors when integrated with LLMs.

Scalability: Robust tools can handle edge cases and scale with increased usage.

Security: Keeping critical validation logic in code rather than in fragile prompts reduces exposure to prompt injection.

Faster Iteration: Tools can be developed, tested, and deployed independently.

Common Pitfalls of Skipping Tool Development

Over-Reliance on LLMs: Developers sometimes use LLMs to handle tasks better suited for deterministic tools, leading to inconsistent results.

Untested Tools: Rushing to integrate untested tools with LLMs can cause cascading failures.

Poor Error Handling: Without proper tool validation, errors propagate through the agent, making debugging difficult.
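One possible way to stop errors from propagating unchecked is to have every tool call return a structured result that the orchestration layer can inspect. The `ToolResult` type, the `call_tool` wrapper, and the `parse_amount` tool below are illustrative assumptions, not part of any framework mentioned here.

```python
from dataclasses import dataclass
from typing import Any, Callable, Optional


@dataclass(frozen=True)
class ToolResult:
    ok: bool
    value: Optional[Any] = None
    error: Optional[str] = None


def call_tool(tool: Callable[..., Any], *args: Any, **kwargs: Any) -> ToolResult:
    """Run a tool and convert any exception into a structured failure."""
    try:
        return ToolResult(ok=True, value=tool(*args, **kwargs))
    except Exception as exc:
        # Keep the exception type so the caller can tell failure modes apart.
        return ToolResult(ok=False, error=f"{type(exc).__name__}: {exc}")


def parse_amount(text: str) -> float:
    """Hypothetical tool: parse an invoice amount such as '1,250.00'."""
    return float(text.replace(",", ""))
```

A caller can then branch on `result.ok` and decide whether to retry, escalate, or report the failure, rather than letting an unhandled exception surface somewhere in the agent loop.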

What Is the “Tools-First” Approach?

Definition

A tool in agent systems is a deterministic, testable unit of logic encapsulating business operations, integrations, or API calls. Think:

API connectors (CRM, weather, finance)

Business logic/calculators

Document parsers

Database queries

Integration wrappers (Slack, Jira)

A tool is deterministic code: the same input produces the same output (given the same external state). It wraps real business logic or external actions and is callable by the agent.

LLM-First vs Tools-First

Core Tool Properties

Deterministic: Same input → same output

Composable: Reusable across agents and workflows

Testable: Can be unit-tested in isolation, with external dependencies mocked
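The "testable" property is easiest to see when the external dependency is passed in rather than hard-coded. The sketch below assumes a hypothetical `get_ticket_status` tool whose backend call is injected as `fetch`, and uses `unittest.mock.Mock` from the standard library to stand in for it.

```python
from typing import Callable, Dict
from unittest.mock import Mock


def get_ticket_status(ticket_id: str, fetch: Callable[[str], Dict[str, str]]) -> str:
    """Hypothetical tool: look up a support ticket via an injected fetch function."""
    if not ticket_id.strip():
        raise ValueError("ticket_id must not be empty")
    record = fetch(ticket_id)
    return record["status"].lower()


def test_returns_normalized_status() -> None:
    fake_fetch = Mock(return_value={"status": "OPEN"})
    assert get_ticket_status("T-1", fake_fetch) == "open"
    fake_fetch.assert_called_once_with("T-1")


def test_rejects_empty_id_without_calling_backend() -> None:
    fake_fetch = Mock()
    try:
        get_ticket_status("  ", fake_fetch)
    except ValueError:
        pass
    else:
        raise AssertionError("expected ValueError")
    # Validation happens before any backend call is attempted.
    fake_fetch.assert_not_called()
```

Both tests run without a network, a ticketing system, or an LLM, which is what makes the tool the easiest part of the stack to harden.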

Key Principle

Write, test, and harden your tools first. Only then orchestrate with LLMs.

Best Practices for Tool Development

To maximize the effectiveness of the Tools-First Approach, follow these best practices when designing, writing, and testing tools:

Define Clear Specifications: Document the tool’s purpose, inputs, outputs, and error conditions. Use type hints (e.g., Python’s typing module) to declare input/output contracts; type hints alone are not checked at runtime, so pair them with schema validation.

Implement Comprehensive Logging: Use structured logging to capture tool execution details, inputs, outputs, and errors for debugging and monitoring.

Enable Tracing: Integrate tracing (e.g., OpenTelemetry, Langfuse) to track tool execution in distributed systems, especially when tools interact with external services.

Handle Errors Gracefully: Anticipate edge cases and implement robust error handling with meaningful error messages.

Write Unit Tests: Aim for near-100% test coverage, including error paths and edge cases, so the tool’s behavior is verified before an LLM ever calls it.

Validate Inputs and Outputs: Use schemas (e.g., Pydantic) to validate data entering and leaving the tool.

Optimize Performance: Profile the tool to ensure it performs efficiently, especially for high-frequency tasks.

Document the Tool: Provide clear documentation for other developers or agents to understand and use the tool.

Version Control: Use semantic versioning for tools to manage updates and dependencies.
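As a small illustration of the logging and profiling practices above, the following sketch uses only the standard library to emit one JSON record per tool call. The `logged_tool` decorator and the `celsius_to_fahrenheit` tool are assumptions made for this example; a production setup would more likely use a structured logging library and would redact secrets such as API keys before logging inputs.

```python
import functools
import json
import logging
import time
from typing import Any, Callable

logger = logging.getLogger("tools")


def logged_tool(func: Callable[..., Any]) -> Callable[..., Any]:
    """Log one JSON record per call with inputs, outcome, and duration."""

    @functools.wraps(func)
    def wrapper(*args: Any, **kwargs: Any) -> Any:
        record = {"tool": func.__name__, "args": repr(args), "kwargs": repr(kwargs)}
        started = time.perf_counter()
        try:
            result = func(*args, **kwargs)
        except Exception as exc:
            record.update(status="error", error=f"{type(exc).__name__}: {exc}")
            raise
        else:
            record.update(status="ok", result=repr(result))
            return result
        finally:
            # Runs on both success and failure, so every call is recorded.
            record["duration_ms"] = round((time.perf_counter() - started) * 1000, 3)
            logger.info(json.dumps(record))

    return wrapper


@logged_tool
def celsius_to_fahrenheit(celsius: float) -> float:
    """Hypothetical tool used only to exercise the decorator."""
    return celsius * 9 / 5 + 32
```

Because the decorator is independent of any particular tool, the same wrapper can be applied across a tool library to get uniform records for debugging and monitoring.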

Implementation

Below is the complete implementation of the Weather API Tool, incorporating best practices.

    from http import HTTPStatus
    from typing import Any, Dict

    import requests
    import structlog
    from opentelemetry import trace
    from opentelemetry.trace import Span
    from pydantic import BaseModel, Field, ValidationError
    from requests.exceptions import RequestException

    # Initialize structured logger
    logger = structlog.get_logger()

    # Initialize OpenTelemetry tracer
    tracer = trace.get_tracer(__name__)


    # Input schema
    class WeatherInput(BaseModel):
        city: str = Field(..., min_length=1, description="Name of the city")
        api_key: str = Field(..., min_length=1, description="OpenWeatherMap API key")


    # Output schema
    class WeatherOutput(BaseModel):
        temperature: float = Field(..., description="Temperature in Celsius")
        condition: str = Field(..., description="Weather condition (e.g., Clear)")
        humidity: int = Field(..., ge=0, le=100, description="Humidity percentage")


    class WeatherAPIError(Exception):
        """Custom exception for Weather API errors."""


    class WeatherTool:
        """A tool to fetch current weather data for a given city."""

        # HTTPS keeps the API key out of plain-text traffic.
        def __init__(self, base_url: str = "https://api.openweathermap.org/data/2.5/weather"):
            self.base_url = base_url

        @tracer.start_as_current_span("fetch_weather")
        def fetch_weather(self, input_data: WeatherInput) -> WeatherOutput:
            """
            Fetch weather data for the specified city.

            Args:
                input_data: WeatherInput object containing city and API key.

            Returns:
                WeatherOutput object with temperature, condition, and humidity.

            Raises:
                WeatherAPIError: If the API request fails or returns invalid data.
            """
            span: Span = trace.get_current_span()
            span.set_attribute("city", input_data.city)
            logger.info("Fetching weather data", city=input_data.city)

            try:
                # Make API request
                params = {
                    "q": input_data.city,
                    "appid": input_data.api_key,
                    "units": "metric",  # Use Celsius
                }
                response = requests.get(self.base_url, params=params, timeout=5)
                span.set_attribute("http.status_code", response.status_code)

                # Check for HTTP errors
                if response.status_code != HTTPStatus.OK:
                    error_msg = f"API request failed: {response.status_code} - {response.text}"
                    logger.error(error_msg)
                    raise WeatherAPIError(error_msg)

                # Parse response
                data = response.json()
                weather_data = self._parse_weather_data(data)
                logger.info("Weather data fetched successfully", city=input_data.city)
                return weather_data

            except RequestException as e:
                error_msg = f"Network error: {str(e)}"
                logger.error(error_msg, exc_info=True)
                span.record_exception(e)
                raise WeatherAPIError(error_msg)

            except ValidationError as e:
                error_msg = f"Invalid response data: {str(e)}"
                logger.error(error_msg, exc_info=True)
                span.record_exception(e)
                raise WeatherAPIError(error_msg)

            except Exception as e:
                # Note: this clause also catches the WeatherAPIError raised above for
                # non-200 responses and re-wraps it with an "Unexpected error" prefix.
                # Adding `except WeatherAPIError: raise` before it would avoid that.
                error_msg = f"Unexpected error: {str(e)}"
                logger.error(error_msg, exc_info=True)
                span.record_exception(e)
                raise WeatherAPIError(error_msg)

        def _parse_weather_data(self, data: Dict[str, Any]) -> WeatherOutput:
            """
            Parse raw API response into WeatherOutput schema.

            Args:
                data: Raw JSON response from the API.

            Returns:
                WeatherOutput object.

            Raises:
                ValidationError: If the response data is invalid.
            """
            return WeatherOutput(
                temperature=data["main"]["temp"],
                condition=data["weather"][0]["main"],
                humidity=data["main"]["humidity"],
            )

The agent script then wraps the tool with function_tool and registers it on an agent (the imports from the agents package match the OpenAI Agents SDK):

    import asyncio

    from dotenv import load_dotenv

    from agents import Agent, Runner, function_tool
    from tools import WeatherTool, WeatherInput, WeatherOutput

    load_dotenv()


    # Note: api_key is a field of WeatherInput, so it is part of the schema the
    # model fills in. Reading the key from the environment inside the tool
    # would keep it out of the model's hands.
    @function_tool
    def get_weather(weather_input: WeatherInput) -> WeatherOutput:
        print("[debug] get_weather called")
        weather_tool = WeatherTool()
        return weather_tool.fetch_weather(weather_input)


    agent = Agent(
        name="Hello world",
        instructions="You are a helpful agent.",
        tools=[get_weather],
    )


    async def main():
        result = await Runner.run(agent, input="What's the weather in Tokyo?")
        print(result.final_output)
        # The weather in Tokyo is sunny.


    if __name__ == "__main__":
        asyncio.run(main())

A trace from a run in which the API rejected the key (HTTP 401):

    {
        "name": "fetch_weather",
        "trace_id": "9a3a1ee00e4151e3c9e49a2127f8baab",
        "span_id": "1b66ffcf7ab68586",
        "attributes": {
            "city": "Tokyo",
            "http.status_code": 401
        },
        "status": "ERROR",
        "start_time": "2025-07-27T12:46:40.463738Z",
        "end_time": "2025-07-27T12:46:40.527270Z",
        "events": [
            {
                "name": "exception",
                "timestamp": "2025-07-27T12:46:40.521267Z",
                "attributes": {
                    "exception.type": "tools.WeatherAPIError",
                    "exception.message": "API request failed: 401 - {\"cod\":401, \"message\": \"Invalid API key. Please see https://openweathermap.org/faq#error401 for more info.\"}",
                    "exception.stacktrace": "Traceback (most recent call last):\n File \"C:\\Users\\Zahiruddin_T\\Documents\\LocalDriveProjects\\ToolFirstApproachForAgents\\src\\tools.py\", line 85, in fetch_weather\n raise WeatherAPIError(error_msg)\ntools.WeatherAPIError: API request failed: 401 - {\"cod\":401, \"message\": \"Invalid API key. Please see https://openweathermap.org/faq#error401 for more info.\"}\n",
                    "exception.escaped": "False"
                }
            },
            {
                "name": "exception",
                "timestamp": "2025-07-27T12:46:40.527270Z",
                "attributes": {
                    "exception.type": "tools.WeatherAPIError",
                    "exception.message": "Unexpected error: API request failed: 401 - {\"cod\":401, \"message\": \"Invalid API key. Please see https://openweathermap.org/faq#error401 for more info.\"}",
                    "exception.stacktrace": "Traceback (most recent call last):\n File \"C:\\Users\\Zahiruddin_T\\Documents\\LocalDriveProjects\\ToolFirstApproachForAgents\\src\\tools.py\", line 85, in fetch_weather\n raise WeatherAPIError(error_msg)\ntools.WeatherAPIError: API request failed: 401 - {\"cod\":401, \"message\": \"Invalid API key. Please see https://openweathermap.org/faq#error401 for more info.\"}\n\nDuring handling of the above exception, another exception occurred:\n\nTraceback (most recent call last):\n File \"c:\\Users\\Zahiruddin_T\\Documents\\LocalDriveProjects\\ToolFirstApproachForAgents\\.venv\\Lib\\site-packages\\opentelemetry\\trace\\__init__.py\", line 589, in use_span\n yield span\n File \"c:\\Users\\Zahiruddin_T\\Documents\\LocalDriveProjects\\ToolFirstApproachForAgents\\.venv\\Lib\\site-packages\\opentelemetry\\sdk\\trace\\__init__.py\", line 1105, in start_as_current_span\n yield span\n File \"C:\\Python3127\\Lib\\contextlib.py\", line 81, in inner\n return func(*args, **kwds)\n ^^^^^^^^^^^^^^^^^^^\n File \"C:\\Users\\Zahiruddin_T\\Documents\\LocalDriveProjects\\ToolFirstApproachForAgents\\src\\tools.py\", line 109, in fetch_weather\n raise WeatherAPIError(error_msg)\ntools.WeatherAPIError: Unexpected error: API request failed: 401 - {\"cod\":401, \"message\": \"Invalid API key. Please see https://openweathermap.org/faq#error401 for more info.\"}\n",
                    "exception.escaped": "False"
                }
            }
        ]
    }
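The trace contains two exception events, and the second message begins with "Unexpected error: API request failed". That is consistent with the generic `except Exception` clause catching the WeatherAPIError that was raised for the non-200 status and wrapping it again. The sketch below reproduces the pattern in isolation and shows one possible fix; `ToolError`, `check_status`, and both `fetch_*` functions are stand-ins invented for this example, not code from the repository.

```python
class ToolError(Exception):
    """Stand-in for a tool-specific exception such as WeatherAPIError."""


def check_status(status_code: int) -> None:
    if status_code != 200:
        raise ToolError(f"API request failed: {status_code}")


def fetch_double_wrapped(status_code: int) -> str:
    try:
        check_status(status_code)
        return "ok"
    except Exception as exc:
        # ToolError is an Exception, so it is caught and wrapped a second time.
        raise ToolError(f"Unexpected error: {exc}")


def fetch_wrapped_once(status_code: int) -> str:
    try:
        check_status(status_code)
        return "ok"
    except ToolError:
        # Already a domain error: let it propagate unchanged.
        raise
    except Exception as exc:
        raise ToolError(f"Unexpected error: {exc}") from exc
```

With the extra clause, a 401 surfaces as a single "API request failed" error, and the "Unexpected error" prefix is reserved for failures that really were unanticipated.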

Full Code

https://github.com/zahere-dev/tool-first-approach-to-building-agents

Summary: Think Like a Systems Engineer

Bottom Line

In modern AI agent systems, tools are the code and LLMs are the orchestration glue. Harden your tools first, then let LLMs coordinate. That’s how you build agents that scale, adapt, and don’t leave you debugging in the dark.